Janis Translate - Migrating CWL / Galaxy to Nextflow¶

Anticipated workshop duration when delivered to a group of participants is 3.5 hours.

For queries relating to this workshop, contact Melbourne Bioinformatics (bioinformatics-training@unimelb.edu.au).

Overview¶

Topic¶

- Genomics

- Transcriptomics

- Proteomics

- Metabolomics

- Statistics and visualisation

- Structural Modelling

- Basic skills

Skill level¶

- Beginner

- Intermediate

- Advanced

The workshop is conducted in a Unix environment.

Command line experience is required.

Prior experience with developing, running, and troubleshooting Nextflow workflows is strongly recommended.

Description¶

Bioinformatics workflows are critical for reproducibly transferring methodologies between research groups and for scaling between computational infrastructures. Research groups currently invest a lot of time and effort in creating and updating workflows; the ability to translate from one workflow language into another can make them easier to share and maintain with minimal effort. For example, research groups that would like to run an existing Galaxy workflow on HPC, or extend it for their use, might find translating the workflow to Nextflow more suitable for their ongoing use cases.

Janis is a framework that provides an abstraction layer for describing workflows, and a tool that can translate workflows between existing languages such as CWL, WDL, Galaxy and Nextflow. Janis aims to translate as much as it can, leaving the user to validate the workflow and make small manual adjustments where direct translations are not possible. Originating from the Portable Pipelines Project between Melbourne Bioinformatics, the Peter MacCallum Cancer Centre, and the Walter and Eliza Hall Institute of Medical Research, this tool is now available for everyone to use.

This workshop provides an introduction to Janis and how it can be used to translate Galaxy and CWL based tools and workflows into Nextflow. Using hands-on examples we’ll step you through the process and demonstrate how to optimise, troubleshoot and test the translated workflows.

Section 1 covers migration of CWL tools / workflows to Nextflow.

Section 2 covers migration of Galaxy tool wrappers / workflows to Nextflow.

Learning Objectives¶

By the end of the workshop you should be able to:

- Recognise the main aspects and benefits of workflow translation

- Use Janis to translate Galaxy / CWL tools & workflows to Nextflow

- Configure Nextflow to run translated tools & workflows

- Troubleshoot translated Nextflow tools & workflows

- Adjust the translated Nextflow tools / workflows & complete missing translations manually

Required Software¶

- IDE of your choosing (we use VS Code in this workshop).

- Refer back to the setup instructions document provided if needed.

Required Data¶

- Sample data will be provided on the compute resource.

Author Information¶

Written by: Grace Hall

Melbourne Bioinformatics, University of Melbourne

Created/Reviewed: May 2023

Contents¶

This workshop has 2 main sections.

We will translate CWL and Galaxy tools and workflows using janis-translate, then make manual adjustments and run them on sample data to check their validity.

Section 1: CWL → Nextflow

- Section 1.1: Samtools Flagstat Tool

- Section 1.2: GATK HaplotypeCaller Tool

- Section 1.3: Align Sort Markdup Workflow

Section 2: Galaxy → Nextflow

- Section 2.1: Samtools Flagstat Tool

- Section 2.2: Limma Voom Tool

- Section 2.3: RNA-Seq Reads to Counts Workflow

Section 1.1: Samtools Flagstat Tool (CWL)¶

Introduction¶

This section demonstrates translation of a basic samtools flagstat tool from CWL to Nextflow using janis translate.

The CWL tool used in this section - samtools_flagstat.cwl - is taken from the McDonnell Genome Institute (MGI) analysis-workflows repository.

This resource stores publicly available analysis pipelines for genomics data.

It is a fantastic piece of research software, and the authors thank MGI for their contribution to open-source research software.

The underlying software run by this tool - Samtools Flagstat - displays summary information for an alignment file.

Janis Translate¶

Downloading Janis Translate

In this workshop we will use a singularity container to run janis translate.

Containers are useful because they package the software together with its dependencies. This removes the need to install it with a package manager and helps it run consistently across machines.

Singularity is already set up on your VM.

From your home directory, run the following command to pull the janis image:

singularity pull janis.sif docker://pppjanistranslate/janis-translate:0.13.0

Check your image by running the following command:

singularity exec ~/janis.sif janis translate

If the image is working, you should see the janis translate helptext.

Downloading Training Data and Tool / Workflow Source Files

For this workshop we will fetch all needed data from Zenodo using wget.

This archive contains source CWL / Galaxy files, sample data, and finished translations as a reference.

Run the following commands to download and extract the Zenodo archive:

wget https://zenodo.org/record/8052348/files/data.tar.gz

tar -xvf data.tar.gz

Inside the data folder we have the following structure:

data
├── final          # finalised translations for your reference
│   ├── cwl
│   └── galaxy
├── sample_data    # sample data to test nextflow processes / workflows
│   ├── cwl
│   └── galaxy
└── source         # CWL / Galaxy files we will translate
    ├── cwl
    └── galaxy
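You can confirm the archive extracted correctly by checking the layout above with a small shell function. This is a minimal sketch. check_layout is a hypothetical helper, not part of the workshop files, and it only checks that the six cwl / galaxy subfolders shown in the tree exist.

```bash
#!/usr/bin/env bash
# Minimal sketch: check that the extracted archive has the folders shown in the tree.
# check_layout is a hypothetical helper, not part of the workshop material.
check_layout() {
  local root="${1:-data}"   # defaults to ./data, the folder created by tar
  local status=0 sub
  for sub in final/cwl final/galaxy sample_data/cwl sample_data/galaxy source/cwl source/galaxy; do
    if [ ! -d "$root/$sub" ]; then
      echo "missing: $root/$sub" >&2
      status=1
    fi
  done
  return "$status"
}
```

Run check_layout data from the directory where you ran tar. It prints any missing folders and returns a non-zero status if something is absent.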

The test data provided in data/sample_data is also available at /cvmfs/data.biocommons.aarnet.edu.au/training_materials/MelbBio_training/Janis_0723/sample_data/

Running Janis Translate¶

To translate a tool / workflow, we use janis translate.

janis translate --from <src> --to <dest> <filepath>

The --from argument specifies the workflow language of the source file(s), and --to specifies the destination we want to translate to.

The <filepath> argument is the source file we will translate.

In this section we are translating a single CWL CommandLineTool, so we will only produce a single nextflow file.

In this section, we want to translate CWL -> Nextflow, and our source CWL file is in the data folder we just downloaded from zenodo.

Its path is data/source/cwl/samtools_flagstat.cwl.

Run the following command:

singularity exec ~/janis.sif janis translate --from cwl --to nextflow data/source/cwl/samtools_flagstat.cwl

You will see a folder called translated/ appear, and a nextflow process called samtools_flagstat.nf will be present inside.

Manual Adjustments¶

The translated/samtools_flagstat.nf file should be similar to the following:

nextflow.enable.dsl = 2

process SAMTOOLS_FLAGSTAT {

    container "quay.io/biocontainers/samtools:1.11--h6270b1f_0"

    input:
    path bam

    output:
    path "${bam[0]}.flagstat", emit: flagstats

    script:
    def bam = bam[0]
    """
    /usr/local/bin/samtools flagstat \
    ${bam} \
    > ${bam}.flagstat \
    """

}

We can see that this nextflow process has a single input, a single output, and calls samtools flagstat on the input bam.

We can also see that a container image is available for this tool. In the next section we will run this process using some sample data and the specified container.

This translation is correct for the samtools_flagstat.cwl file and needs no adjusting.

Have a look at the source CWL file to see how they match up.

Note:

def bam = bam[0] in the script block is used to handle datatypes with secondary files.

The bam input is an indexed bam type, so it requires a .bai file to also be present in the working directory alongside the .bam file.

For this reason the bam input is supplied as an Array with 2 files: the .bam and the .bai.

Here def bam = bam[0] is used so that bam refers to the .bam file in that Array.
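The indexed bam input only works when the .bai travels with the .bam, so it can be worth confirming the pair exists before launching Nextflow. This is a minimal sketch. It assumes the index is named <name>.bam.bai, as in the sample data, and has_index is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the .bam and its .bam.bai index both exist,
# mirroring the indexed-bam input that Nextflow receives as [file.bam, file.bam.bai].
has_index() {
  local bam="$1"
  [ -f "$bam" ] && [ -f "${bam}.bai" ]
}

# Example (paths from this workshop):
# has_index /home2/training/data/sample_data/cwl/2895499223_sorted.bam && echo "index found"
```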

Running Samtools Flagstat as a Workflow¶

Collecting Process Outputs

Let’s add a publishDir directive to our translated process so that we can capture the outputs of this process.

process SAMTOOLS_FLAGSTAT {

    container "quay.io/biocontainers/samtools:1.11--h6270b1f_0"
    publishDir "./outputs"    // <- add this line

    ...

}

Nextflow allows us to capture the outputs created by a process using the publishDir directive seen above.

Setting up nextflow.config

To run this process, we will set up a nextflow.config file and add some lines to the top of our process definition to turn it into a workflow.

Create a new file called nextflow.config in the translated/ folder alongside samtools_flagstat.nf.

For this workshop, we are using sample data located at /home2/training/data/sample_data/.

Copy and paste the following code into your nextflow.config file:

nextflow.enable.dsl = 2
singularity.enabled = true
singularity.cacheDir = "$HOME/.singularity/cache"

params {
    bam = [
        '/home2/training/data/sample_data/cwl/2895499223_sorted.bam',
        '/home2/training/data/sample_data/cwl/2895499223_sorted.bam.bai',
    ]
}

This tells nextflow how to run, and sets up an input parameter for our indexed bam input.

The bam parameter is a list which provides paths to the .bam and .bai sample data we will use to test the nextflow translation. From here, we can refer to the indexed bam input as params.bam in other files.

NOTE

nextflow.enable.dsl = 2 ensures that we are using the DSL2 nextflow syntax, which is the current standard.

singularity.enabled = true tells nextflow to run processes using singularity. Our samtools_flagstat.nf has a directive with the form container "quay.io/biocontainers/samtools:1.11--h6270b1f_0", so nextflow will use the specified image when running this process.

singularity.cacheDir = "$HOME/.singularity/cache" tells nextflow where singularity images are stored.

Nextflow will handle the singularity image download and store it in the cache specified above. If you’d like to use an already available container, you can change the container directive in samtools_flagstat.nf to container "/cvmfs/singularity.galaxyproject.org/all/samtools:1.11--h6270b1f_0".

The test data used above is also available at /cvmfs, and can be accessed as follows:

bam = [
    '/cvmfs/data.biocommons.aarnet.edu.au/training_materials/MelbBio_training/Janis_0723/sample_data/cwl/2895499223_sorted.bam',
    '/cvmfs/data.biocommons.aarnet.edu.au/training_materials/MelbBio_training/Janis_0723/sample_data/cwl/2895499223_sorted.bam.bai',
]
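If you are unsure which copy of the sample data your machine has, a small shell function can pick the first location that exists. This is a sketch. The two paths are the ones given in this workshop, and pick_data_dir is a hypothetical helper you would adapt to your own setup.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the first directory argument that exists, else fail.
pick_data_dir() {
  local candidate
  for candidate in "$@"; do
    if [ -d "$candidate" ]; then
      printf '%s\n' "$candidate"
      return 0
    fi
  done
  echo "no sample_data directory found" >&2
  return 1
}

# Example: prefer the local copy, fall back to the /cvmfs copy.
# data_dir=$(pick_data_dir /home2/training/data/sample_data \
#   /cvmfs/data.biocommons.aarnet.edu.au/training_materials/MelbBio_training/Janis_0723/sample_data)
```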

Creating Workflow & Passing Data

Now that we have the nextflow.config file set up, we will add a few lines to samtools_flagstat.nf to turn it into a workflow.

Copy and paste the following lines at the top of samtools_flagstat.nf:

ch_bam = Channel.fromPath( params.bam ).toList()

workflow {
    SAMTOOLS_FLAGSTAT(ch_bam)
}

The first line creates a nextflow Channel for our bam input and ensures it is a list.

The Channel.toList() part collects our files into a list, as both the .bam and .bai files must be passed together.

The params.bam global variable we set up previously is used to supply the paths to our sample data.

The new workflow {} section declares the main workflow entry point.

When we run this file, nextflow will look for this section and run the workflow contained within.

In our case, the workflow only contains a single task, which runs the SAMTOOLS_FLAGSTAT process defined below the workflow section. The single SAMTOOLS_FLAGSTAT input is being passed data from our ch_bam channel we declared at the top of the file.

Running Our Workflow

Ensure you are in the translated/ working directory, where nextflow.config and samtools_flagstat.nf reside.

cd translated

To run the workflow using our sample data, we can now write the following command:

nextflow run samtools_flagstat.nf

Nextflow will automatically check if there is a nextflow.config file in the working directory, and if so will use that to configure itself. Our inputs are supplied in nextflow.config alongside the dsl2 & singularity config, so it should run without issue.

Once completed, we can check the outputs/ folder to view our results.

If everything went well, there should be a file called 2895499223_sorted.bam.flagstat with the following contents:

2495 + 0 in total (QC-passed reads + QC-failed reads)
0 + 0 secondary
1 + 0 supplementary
0 + 0 duplicates
2480 + 0 mapped (99.40% : N/A)
2494 + 0 paired in sequencing
1247 + 0 read1
1247 + 0 read2
2460 + 0 properly paired (98.64% : N/A)
2464 + 0 with itself and mate mapped
15 + 0 singletons (0.60% : N/A)
0 + 0 with mate mapped to a different chr
0 + 0 with mate mapped to a different chr (mapQ>=5)
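You can also extract the mapped and total counts with awk instead of reading the report by eye. This is a minimal sketch, and it assumes the samtools 1.11 text layout shown above. Other samtools versions may print extra lines such as "primary mapped", which the $4 == "mapped" test skips. flagstat_mapped is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "<mapped> <total> <percent mapped>" from flagstat text.
# Reads the files given as arguments, or stdin if none; uses the QC-passed column only.
flagstat_mapped() {
  LC_ALL=C awk '
    /in total/     { total = $1 }    # "2495 + 0 in total (...)"
    $4 == "mapped" { mapped = $1 }   # "2480 + 0 mapped (...)", not "mate mapped"
    END {
      if (total > 0) printf "%d %d %.2f\n", mapped, total, 100 * mapped / total
    }
  ' "$@"
}
```

For the sample output above, flagstat_mapped outputs/2895499223_sorted.bam.flagstat should print 2480 2495 99.40. This matches the 99.40% reported by samtools.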

Conclusion¶

In section 1.1 we explored how to translate a simple CWL tool to a Nextflow process.

If needed, you can check the final/cwl/samtools_flagstat folder in the zenodo data/ directory.

This contains the files we created in this section for your reference.

We will build on our knowledge in section 1.2 by translating a more complex CWL CommandLineTool definition: GATK HaplotypeCaller.

Section 1.2: GATK HaplotypeCaller Tool (CWL)¶

Introduction¶

Now that we have translated a simple CWL CommandLineTool, let’s translate a more realistic one.

This section demonstrates translation of a gatk HaplotypeCaller tool from CWL to Nextflow using janis translate.

The source CWL file used in this section is taken from the McDonnell Genome Institute (MGI) analysis-workflows repository.

This repository stores publicly available analysis pipelines for genomics data.

It is a fantastic piece of research software, and the authors thank MGI for their contribution to open-source research software.

The software tool encapsulated by the source CWL - gatk_haplotype_caller.cwl - calls germline SNPs and indels from an alignment file by locally re-assembling haplotypes.

Janis Translate¶

As in section 1.1, we will use janis-translate to translate our CWL CommandLineTool to a Nextflow process.

First, let’s make sure we’re back in the main training directory:

cd /home2/training

This time, since we already have a translated/ folder, we will supply the -o argument to janis-translate to specify an output directory.

singularity exec ~/janis.sif janis translate -o haplotype_caller --from cwl --to nextflow data/source/cwl/gatk_haplotype_caller.cwl

You will see a folder called haplotype_caller/ appear, and a nextflow process called gatk_haplotype_caller.nf will be present inside.

Manual Adjustments¶

The haplotype_caller/gatk_haplotype_caller.nf file should be similar to the following:

nextflow.enable.dsl = 2

process GATK_HAPLOTYPE_CALLER {

    container "broadinstitute/gatk:4.1.8.1"

    input:
    path bam
    path reference
    path dbsnp_vcf, stageAs: 'dbsnp_vcf/*'
    val intervals
    val gvcf_gq_bands
    val emit_reference_confidence
    val contamination_fraction
    val max_alternate_alleles
    val ploidy
    val read_filter

    output:
    tuple path("output.g.vcf.gz"), path("*.tbi"), emit: gvcf

    script:
    def bam = bam[0]
    def dbsnp_vcf = dbsnp_vcf[0] != null ? "--dbsnp ${dbsnp_vcf[0]}" : ""
    def reference = reference[0]
    def gvcf_gq_bands_joined = gvcf_gq_bands != params.NULL_VALUE ? "-GQB " + gvcf_gq_bands.join(' ') : ""
    def intervals_joined = intervals.join(' ')
    def contamination_fraction = contamination_fraction != params.NULL_VALUE ? "-contamination ${contamination_fraction}" : ""
    def max_alternate_alleles = max_alternate_alleles != params.NULL_VALUE ? "--max_alternate_alleles ${max_alternate_alleles}" : ""
    def ploidy = ploidy != params.NULL_VALUE ? "-ploidy ${ploidy}" : ""
    def read_filter = read_filter != params.NULL_VALUE ? "--read_filter ${read_filter}" : ""
    """
    /gatk/gatk --java-options -Xmx16g HaplotypeCaller \
    -R ${reference} \
    -I ${bam} \
    -ERC ${emit_reference_confidence} \
    ${gvcf_gq_bands_joined} \
    -L ${intervals_joined} \
    ${dbsnp_vcf} \
    ${contamination_fraction} \
    ${max_alternate_alleles} \
    ${ploidy} \
    ${read_filter} \
    -O "output.g.vcf.gz"
    """

}

We can see that this nextflow process has multiple inputs and a single output, and calls gatk HaplotypeCaller using the input data we supply to the process.

Notes on translation:

(1) def bam = bam[0]

This pattern is used in the script block to handle datatypes with secondary files.

The bam input is an indexed bam type, so it requires a .bai file to also be present in the working directory.

To facilitate this, we supply the bam input as a list of 2 files:

[filename.bam, filename.bam.bai].

def bam = bam[0] is used so that when we reference ${bam} in the script body, we are referring to the .bam file in that list.

(2) def ploidy = ploidy != params.NULL_VALUE ? "-ploidy ${ploidy}" : ""

This is how we handle optional val inputs in nextflow.

Nextflow doesn’t like null values to be passed to process inputs, so we use 2 different tricks to make optional inputs possible.

For val inputs we set up a NULL_VALUE param in nextflow.config which we use as a placeholder.

For path inputs (i.e. files and directories) we set up a null file rather than passing null directly.

This ensures that the file is staged correctly in the working directory when an actual filepath is provided.

The format above is a ternary operator of the form def myvar = cond_check ? cond_true : cond_false.

We are redefining the ploidy string variable so that it will be correctly formatted when used in the script block.

If we supply an actual value, it will take the form "-ploidy ${ploidy}".

If we supply the placeholder params.NULL_VALUE value, it will be a blank string "".
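The same optional-argument pattern can be written in plain bash, which may help when reading the generated script blocks. This is an analogy, not something Janis generates. The string NULL mirrors params.NULL_VALUE from nextflow.config, and opt_arg is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Bash analogue of: def ploidy = ploidy != params.NULL_VALUE ? "-ploidy ${ploidy}" : ""
# NULL_VALUE mirrors params.NULL_VALUE = 'NULL' in nextflow.config.
NULL_VALUE='NULL'

# opt_arg FLAG VALUE: prints "FLAG VALUE", or nothing if VALUE is the placeholder.
opt_arg() {
  local flag="$1" value="$2"
  if [ "$value" != "$NULL_VALUE" ]; then
    printf '%s %s' "$flag" "$value"
  fi
}
```

Here opt_arg -ploidy 2 prints -ploidy 2, while opt_arg -ploidy NULL prints nothing. This matches how the ternary leaves ${ploidy} blank in the script body.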

(3) def intervals_joined = intervals.join(' ')

Templates our intervals list of strings into a single string.

Each item is joined by a space: e.g. ["hello", "there"] -> "hello there".

(4) def dbsnp_vcf = dbsnp_vcf[0] != null ? "--dbsnp ${dbsnp_vcf[0]}" : ""

Same as (2), except it uses a different check for a null value and selects the primary .vcf.gz file.

As the path dbsnp_vcf input is optional and consists of a .vcf.gz and a .vcf.gz.tbi file, dbsnp_vcf[0] != null checks whether the primary .vcf.gz file is present.

If true, it templates a string we can use in the script body to provide the required argument: --dbsnp ${dbsnp_vcf[0]}.

If false, it templates an empty string.

This ensures that when we use ${dbsnp_vcf} in the script body, it will be formatted correctly for either case.
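In bash terms, the path check asks whether a primary file was supplied at all. This is a sketch, and it assumes the staged files arrive as positional arguments: the primary .vcf.gz first, then its .tbi. dbsnp_arg is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Bash analogue of:
#   def dbsnp_vcf = dbsnp_vcf[0] != null ? "--dbsnp ${dbsnp_vcf[0]}" : ""
# Arguments: primary .vcf.gz first, then its .tbi (both optional).
dbsnp_arg() {
  local primary="${1:-}"
  if [ -n "$primary" ]; then
    # only the primary file is named; the .tbi just needs to sit alongside it
    printf '%s %s' '--dbsnp' "$primary"
  fi
}
```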

This translation is correct for the gatk_haplotype_caller.cwl file and needs no adjusting.

Have a look at the source CWL file to see how they match up.

Running GATK HaplotypeCaller as a Workflow¶

Collecting Process Outputs

Let’s add a publishDir directive to our translated process so that we can capture the outputs of this process.

process GATK_HAPLOTYPE_CALLER {

    container "broadinstitute/gatk:4.1.8.1"
    publishDir "./outputs"

    ...

}

Nextflow allows us to capture the outputs created by a process using the publishDir directive seen above.

NOTE

Our gatk_haplotype_caller.nf has a container directive with the form container "broadinstitute/gatk:4.1.8.1", so Nextflow will handle the singularity image download and use the specified image when running this process (provided that singularity.enabled is set to true in the nextflow config).

If you’d like to use an already available container, you can change this container directive to container "/cvmfs/singularity.galaxyproject.org/all/gatk4:4.1.8.1--py38_0".

Setting up nextflow.config

To run this process, we will set up a nextflow.config file and add some lines to the top of our process definition to turn it into a workflow.

Create a new file called nextflow.config in the haplotype_caller/ folder alongside gatk_haplotype_caller.nf.

Copy and paste the following code into your nextflow.config file:

nextflow.enable.dsl = 2
singularity.enabled = true
singularity.cacheDir = "$HOME/.singularity/cache"

params {
    NULL_VALUE = 'NULL'

    bam = [
        '/home2/training/data/sample_data/cwl/2895499223_sorted.bam',
        '/home2/training/data/sample_data/cwl/2895499223_sorted.bam.bai',
    ]
    dbsnp = [
        '/home2/training/data/sample_data/cwl/chr17_test_dbsnp.vcf.gz',
        '/home2/training/data/sample_data/cwl/chr17_test_dbsnp.vcf.gz.tbi',
    ]
    reference = [
        '/home2/training/data/sample_data/cwl/chr17_test.fa',
        '/home2/training/data/sample_data/cwl/chr17_test.dict',
        '/home2/training/data/sample_data/cwl/chr17_test.fa.fai',
    ]
    gvcf_gq_bands = NULL_VALUE
    intervals = ["chr17"]
    emit_reference_confidence = 'GVCF'
    contamination_fraction = NULL_VALUE
    max_alternate_alleles = NULL_VALUE
    ploidy = NULL_VALUE
    read_filter = NULL_VALUE
}

file inputs

The bam parameter is a list which provides paths to the .bam and .bai sample data we will use to test the nextflow translation. From here, we can refer to the indexed bam input as params.bam in other files. The dbsnp and reference params follow this same pattern.

non-file inputs

We also set up a NULL_VALUE param which we use as a placeholder for a null value.

In this case we are providing null values for the gvcf_gq_bands, contamination_fraction, max_alternate_alleles, ploidy and read_filter inputs as they are all optional.

Creating Workflow & Passing Data

Now that we have the nextflow.config file set up, we will add a few lines to gatk_haplotype_caller.nf to turn it into a workflow.

Copy and paste the following lines at the top of gatk_haplotype_caller.nf:

ch_bam = Channel.fromPath( params.bam ).toList()
ch_dbsnp = Channel.fromPath( params.dbsnp ).toList()
ch_reference = Channel.fromPath( params.reference ).toList()

workflow {

    GATK_HAPLOTYPE_CALLER(
        ch_bam,
        ch_reference,
        ch_dbsnp,
        params.intervals,
        params.gvcf_gq_bands,
        params.emit_reference_confidence,
        params.contamination_fraction,
        params.max_alternate_alleles,
        params.ploidy,
        params.read_filter,
    )

}

The first 3 lines create nextflow Channels for our bam, dbsnp, and reference inputs and ensure they are lists.

The Channel.toList() part collects our files into a list, as the primary & secondary files for these datatypes must be passed together.

The params.bam, params.dbsnp and params.reference global variables we set up previously are used to supply the paths to our sample data for these channels.

The new workflow {} section declares the main workflow entry point.

When we run this file, nextflow will look for this section and run the workflow contained within.

In our case, the workflow only contains a single task, which runs the GATK_HAPLOTYPE_CALLER process defined below the workflow section. We call GATK_HAPLOTYPE_CALLER by feeding inputs in the correct order, using the channels we declared at the top of the file, and variables we set up in the global params object.

Running Our Workflow

Ensure you are in the haplotype_caller/ working directory, where nextflow.config and gatk_haplotype_caller.nf reside.

cd haplotype_caller

To run the workflow using our sample data, we can now write the following command:

nextflow run gatk_haplotype_caller.nf

Nextflow will automatically check if there is a nextflow.config file in the working directory, and if so will use that to configure itself. Our inputs are supplied in nextflow.config alongside the dsl2 & singularity config, so it should run without issue.

Once completed, we can check the outputs/ folder to view our results.

If everything went well, the outputs/ folder should contain 2 files:

- output.g.vcf.gz

- output.g.vcf.gz.tbi
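A short shell function can confirm that both files exist and that the VCF decompresses cleanly. bgzip output is gzip-compatible, so gzip -t can test it. This is only a sketch: it does not validate the variant calls themselves, and check_gvcf_outputs is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: confirm the HaplotypeCaller outputs exist and the VCF is readable.
# Only checks presence and compression integrity, not the variant calls themselves.
check_gvcf_outputs() {
  local dir="${1:-outputs}"
  local vcf="$dir/output.g.vcf.gz"
  if [ ! -s "$vcf" ]; then echo "missing or empty: $vcf" >&2; return 1; fi
  if [ ! -s "$vcf.tbi" ]; then echo "missing or empty: $vcf.tbi" >&2; return 1; fi
  # bgzip output is valid multi-member gzip, so gzip -t can test its integrity
  if ! gzip -t "$vcf" 2>/dev/null; then echo "corrupt: $vcf" >&2; return 1; fi
}
```

Run check_gvcf_outputs outputs from the haplotype_caller/ directory after the workflow finishes.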

Conclusion¶

In this section we explored how to translate the gatk_haplotype_caller CWL CommandLineTool to a Nextflow process.

This is a more real-world situation, where the CommandLineTool has multiple inputs, secondary files, and optionality.

If needed, you can check the final/cwl/gatk_haplotype_caller/ folder inside our data/ directory which contains the files we created in this tutorial as reference.

Now that we have translated two CWL CommandLineTools, we will translate a full CWL Workflow into Nextflow.

Section 1.3: Align Sort Markdup Workflow (CWL)¶

Introduction¶

This section demonstrates translation of a CWL Workflow to Nextflow using janis translate.

The workflow used in this tutorial is taken from the McDonnell Genome Institute (MGI) analysis-workflows repository.

This resource stores publicly available analysis pipelines for genomics data.

It is a fantastic piece of research software, and the authors thank MGI for their contribution to open-source research software.

The workflow used in this tutorial - align_sort_markdup.cwl - accepts multiple unaligned readsets as input and produces a single polished alignment bam file.

Main Inputs

- Unaligned reads stored across multiple BAM files

- Reference genome index

Main Outputs

- Single BAM file storing alignment for all readsets

Steps

- Read alignment (run in parallel across all readsets) - bwa mem

- Merging alignment BAM files to a single file - samtools merge

- Sorting the merged BAM by read name - sambamba sort

- Tagging duplicate reads in the alignment and sorting by coordinate - picard MarkDuplicates

- Indexing the final BAM - samtools index

Janis Translate¶

Previously we were translating single CWL CommandLineTools, but in this section we are translating a full CWL workflow.

To translate a workflow, we supply the main CWL workflow file to janis translate.

In addition to the main CWL Workflow, all Subworkflows / CommandLineTools will be detected & translated.

First, let’s make sure we’re back in the main training directory:

cd /home2/training

In this section, we are translating CWL -> Nextflow, and our source CWL file is located at data/source/cwl/align_sort_markdup/subworkflows/align_sort_markdup.cwl relative to the training directory.

We will add the -o align_sort_markdup argument to specify this as the output directory.

To translate, run the following command:

singularity exec ~/janis.sif janis translate -o align_sort_markdup --from cwl --to nextflow data/source/cwl/align_sort_markdup/subworkflows/align_sort_markdup.cwl

Translation Output¶

The output translation will contain multiple files and directories.

You will see a folder called align_sort_markdup/ appear - inside this folder, we should see the following structure:

align_sort_markdup
├── main.nf                  # main workflow (align_sort_markdup)
├── modules                  # folder containing nextflow processes
│   ├── align_and_tag.nf
│   ├── index_bam.nf
│   ├── mark_duplicates_and_sort.nf
│   ├── merge_bams_samtools.nf
│   └── name_sort.nf
├── nextflow.config          # config file to supply input information
├── subworkflows             # folder containing nextflow subworkflows
│   └── align.nf
└── templates                # folder containing any scripts used by processes
    └── markduplicates_helper.sh
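After translation, one quick consistency check is to list the process names declared under modules/. You can then compare them with the include statements in main.nf and subworkflows/align.nf. This is a minimal sketch, and it assumes each module declares its process on a line starting with "process NAME". list_processes is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list process names declared in <dir>/modules/*.nf, one per line.
# Assumes each declaration starts a line as "process NAME {" (leading spaces allowed).
list_processes() {
  local dir="${1:-.}"
  grep -hoE '^[[:space:]]*process[[:space:]]+[A-Za-z0-9_]+' "$dir"/modules/*.nf \
    | awk '{ print $2 }' \
    | LC_ALL=C sort
}
```

From the training directory, run list_processes align_sort_markdup.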

Now that we have performed translation using janis translate, we need to check the translated workflow for correctness.

From here, we will do a test-run of the workflow using sample data, and make manual adjustments to the translated workflow where needed.

Running the Translated Workflow¶

Inspect main.nf

The main workflow translation appears as main.nf in the align_sort_markdup/ folder.

This filename is just a convention, and we use it to provide clarity about the main entry point of the workflow.

In our case main.nf is equivalent to the main CWL Workflow file we translated: align_sort_markdup.cwl.

NOTE:

Before continuing, feel free to have a look at the other nextflow files which have been generated during translation:

Each CWL Subworkflow appears as a nextflow workflow in the subworkflows/ directory.

Each CWL CommandLineTool appears as a nextflow process in the modules/ directory.

In main.nf we see the nextflow workflows / processes called by the main workflow:

include { ALIGN } from './subworkflows/align'
include { INDEX_BAM } from './modules/index_bam'
include { MARK_DUPLICATES_AND_SORT } from './modules/mark_duplicates_and_sort'
include { MERGE_BAMS_SAMTOOLS as MERGE } from './modules/merge_bams_samtools'
include { NAME_SORT } from './modules/name_sort'

We also see that some nextflow Channels and a single variable have been set up.

These are used to supply data according to nextflow’s adoption of the dataflow programming model.

// data which will be passed as channels
ch_bams = Channel.fromPath( params.bams ).toList()
ch_readgroups = Channel.of( params.readgroups ).toList()

// data which will be passed as variables
reference = params.reference.collect{ file(it) }

Focusing on the channel declarations, we want to note a few things:

- ch_bams is analogous to the ‘bams’ input in align_sort_markdup.cwl.
  It declares a queue channel which expects the data supplied via params.bams to be path types.
  It then groups the bams together as a sole emission using .toList().
  We will need to set up params.bams to supply this data.

- ch_readgroups is analogous to the ‘readgroups’ input in align_sort_markdup.cwl.
  It is the same as ch_bams, except it requires val types rather than path types.
  We will need to set up params.readgroups to supply this data.

We also see a new variable called reference being created.

This reference var is analogous to the ‘reference’ input in align_sort_markdup.cwl.

It collects the ‘reference’ primary & secondary files together in a list.

We will need to set up params.reference to supply this data.

Note: Why does reference appear as a variable, rather than a Channel?

In Nextflow, we find it is best to create Channels for data that moves through the pipeline.

The ch_bams channel is created because we want to consume / transform the input bams during the pipeline.

On the other hand, the reference genome variable is not something we really want to consume or transform.

It’s more like static helper data which can be used by processes, but it doesn’t move through our pipeline, and definitely shouldn’t be consumed or transformed in any way.

Note: .toList()

Nextflow queue channels work differently to lists.

Instead of supplying all items together, queue channels emit each item separately.

This results in a separate task being spawned for each item in the queue when the channel is used.

As the CWL workflow input specifies that bams is a list, we use .toList() to group all items as a sole emission.

This mimics a CWL array, which is the datatype of the bams input.

As it turns out, the CWL workflow ends up running the align step in parallel across the bams & readgroups inputs.

Parallelisation in nextflow happens by default.

To facilitate this, the .flatten() operator is called on ch_bams and ch_readgroups when they are used in the ALIGN task.

This emits the items in ch_bams and ch_readgroups individually, spawning a new ALIGN task for each pair.

We are doing somewhat redundant work by calling .toList(), then .flatten() when ch_bams and ch_readgroups are used.

janis translate isn’t smart enough to detect this yet, but may do so in future.
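To picture what .flatten() achieves, the bash loop below mimics it. It pairs the i-th bam with the i-th readgroup and produces one "task" per pair. This is an analogy only. In Nextflow, a process fed by several queue channels consumes one item from each for every task, and those tasks run concurrently; this loop runs them in order. pair_tasks and its -- separator are hypothetical.

```bash
#!/usr/bin/env bash
# Analogy for ALIGN(ch_bams.flatten(), ..., ch_readgroups.flatten()):
# one "task" per (bam, readgroup) pair, matched by position.
# Usage: pair_tasks BAM... -- READGROUP...
pair_tasks() {
  local -a bams=() rgs=()
  local arg seen_sep=0 i
  for arg in "$@"; do
    if [ "$arg" = "--" ]; then seen_sep=1; continue; fi
    if [ "$seen_sep" -eq 0 ]; then bams+=("$arg"); else rgs+=("$arg"); fi
  done
  if [ "${#bams[@]}" -ne "${#rgs[@]}" ]; then
    echo "bams and readgroups must have the same length" >&2
    return 1
  fi
  for i in "${!bams[@]}"; do
    printf 'ALIGN task %d: %s %s\n' "$((i + 1))" "${bams[$i]}" "${rgs[$i]}"
  done
}
```

For example, pair_tasks a.bam b.bam -- rg1 rg2 prints one line per pair. Mismatched list lengths are rejected, much as the pairing would be ambiguous in the workflow.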

Continuing down main.nf, we see the main workflow {} has 5 tasks.

Each task has been supplied values according to the source workflow. Comments display the name of the process/workflow input which is being fed a particular value.

workflow {

    ALIGN(
        ch_bams.flatten(),        // bam
        ch_reference,             // reference
        ch_readgroups.flatten()   // readgroup
    )

    INDEX_BAM(
        MARK_DUPLICATES_AND_SORT.out.sorted_bam.map{ tuple -> tuple[0] }  // bam
    )

    MARK_DUPLICATES_AND_SORT(
        params.mark_duplicates_and_sort.script,  // script
        NAME_SORT.out.name_sorted_bam,           // bam
        params.NULL_VALUE,                       // input_sort_order
        params.final_name                        // output_name
    )

    MERGE(
        ALIGN.out.tagged_bam.toList(),  // bams
        params.final_name               // name
    )

    NAME_SORT(
        MERGE.out.merged_bam  // bam
    )

}

Before main.nf can be run, we will need to supply values for the params variables.

This is done in nextflow.config.

Inspect nextflow.config

To test the translated workflow, we will first set up workflow inputs in nextflow.config.

Before running a workflow, nextflow will attempt to open nextflow.config and read in config information and global param variables from this file.

We use this file to tell nextflow how to run and to supply workflow inputs.

Inside the align_sort_markdup/ folder you will see that nextflow.config is already provided.

Janis translate creates this file to provide clarity about the necessary workflow inputs, and to set some other config variables.

Open nextflow.config and have a look at the contents. It should look similar to the following:

nextflow.enable.dsl = 2
singularity.enabled = true
singularity.cacheDir = "$HOME/.singularity/cache"

params {

    // Placeholder for null values.
    // Do not alter unless you know what you are doing.
    NULL_VALUE = 'NULL'

    // WORKFLOW OUTPUT DIRECTORY
    outdir = './outputs'

    // INPUTS (MANDATORY)
    bams        = []          // (MANDATORY array)             eg. [file1, ...]
    reference   = []          // (MANDATORY fastawithindexes)  eg. [fasta, amb, ann, bwt, dict, fai, pac, sa]
    readgroups  = NULL_VALUE  // (MANDATORY array)             eg. [string1, ...]

    // INPUTS (OPTIONAL)
    final_name  = "final.bam"

    // PROCESS: ALIGN_AND_TAG
    align_and_tag.cpus = 8
    align_and_tag.memory = 20000

    // PROCESS: INDEX_BAM
    index_bam.memory = 4000

    // PROCESS: MARK_DUPLICATES_AND_SORT
    mark_duplicates_and_sort.script = "/home2/training/align_sort_markdup/templates/markduplicates_helper.sh"
    mark_duplicates_and_sort.cpus = 8
    mark_duplicates_and_sort.memory = 40000

    // PROCESS: MERGE_BAMS_SAMTOOLS
    merge_bams_samtools.cpus = 4
    merge_bams_samtools.memory = 8000

    // PROCESS: NAME_SORT
    name_sort.cpus = 8
    name_sort.memory = 26000

}

NOTE:

NULL_VALUE = 'NULL'

Nextflow doesn’t like null values to be passed to process inputs.

This is a challenge for translation as other languages allow optional inputs.

To get around this, Janis Translate sets the params.NULL_VALUE variable as a null placeholder for val type inputs.

You will see this being used in nextflow processes to do optionality checking.
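For intuition, here is a shell analogue of that optionality check. It is not the code Janis generates: the helper `build_sort_order_args` and the `--sort-order` flag are hypothetical. The sketch simply leaves an option off the command line when its value is the `NULL` placeholder.

```shell
#!/usr/bin/env bash
# Shell analogue of sentinel-based optionality checking (illustrative only).
# NULL_VALUE mirrors params.NULL_VALUE in nextflow.config.
NULL_VALUE='NULL'

# Hypothetical helper: emit "--sort-order <value>" only when a real value is supplied.
build_sort_order_args() {
    if [ "$1" != "$NULL_VALUE" ]; then
        printf '%s %s\n' '--sort-order' "$1"
    fi
}

build_sort_order_args 'NULL'        # prints nothing
build_sort_order_args 'coordinate'  # prints: --sort-order coordinate
```

This mirrors the MARK_DUPLICATES_AND_SORT call in main.nf, where params.NULL_VALUE is passed for input_sort_order.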

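The auto-generated nextflow.config splits up workflow inputs using the following headings:

- // INPUTS (MANDATORY): mandatory workflow inputs.

- // INPUTS (OPTIONAL): optional workflow inputs.

- // PROCESS: ALIGN_AND_TAG (one heading per process): inputs and settings for that process, which may be mandatory or optional.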

Setting up Workflow Inputs

Janis Translate will enter values for workflow inputs where possible. Others need to be manually supplied as they are specific to the input data you wish to use.

In our case, we need to supply values for those under the // INPUTS (MANDATORY) heading.

Specifically, we need to provide sample data for the bams, reference, and readgroups inputs.
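A quick way to find the params that still need values is to search the config for the `[]` and `NULL_VALUE` placeholders. The sketch below uses a hypothetical `find_placeholder_params` helper and assumes the `name = value` layout Janis writes; the inline sample is a trimmed copy of the generated params block.

```shell
#!/usr/bin/env bash
# Print the names of params whose value is still an empty list ([]) or NULL_VALUE.
find_placeholder_params() {
    grep -E '=[[:space:]]*(\[\]|NULL_VALUE)' "$1" | awk '{ print $1 }'
}

# Trimmed copy of the generated params block, written to a temporary file.
config=$(mktemp)
cat > "$config" <<'EOF'
params {
    NULL_VALUE = 'NULL'
    bams = [] // (MANDATORY array) eg. [file1, ...]
    reference = [] // (MANDATORY fastawithindexes)
    readgroups = NULL_VALUE // (MANDATORY array) eg. [string1, ...]
    final_name = "final.bam"
}
EOF

find_placeholder_params "$config"   # prints bams, reference and readgroups, one per line
rm -f "$config"

# On the VM, run it against the real file instead:
#   find_placeholder_params nextflow.config
```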

Additionally, the cpu and memory params need to be changed.

The source CWL Workflow has compute requirements set greater than what is available on your VM.

Copy and paste the following text to replace the contents of the current nextflow.config file:

nextflow.enable.dsl = 2

singularity.enabled = true

singularity.cacheDir = "$HOME/.singularity/cache"

params {

// Placeholder for null values.

// Do not alter unless you know what you are doing.

NULL_VALUE = 'NULL'

// WORKFLOW OUTPUT DIRECTORY

outdir = './outputs'

// INPUTS (MANDATORY)

bams = [

"/home2/training/data/sample_data/cwl/2895499223.bam",

"/home2/training/data/sample_data/cwl/2895499237.bam",

]

reference = [

"/home2/training/data/sample_data/cwl/chr17_test.fa",

"/home2/training/data/sample_data/cwl/chr17_test.fa.amb",

"/home2/training/data/sample_data/cwl/chr17_test.fa.ann",

"/home2/training/data/sample_data/cwl/chr17_test.fa.bwt",

"/home2/training/data/sample_data/cwl/chr17_test.fa.fai",

"/home2/training/data/sample_data/cwl/chr17_test.dict",

"/home2/training/data/sample_data/cwl/chr17_test.fa.pac",

"/home2/training/data/sample_data/cwl/chr17_test.fa.sa",

]

readgroups = [

'@RG\tID:2895499223\tPU:H7HY2CCXX.3.ATCACGGT\tSM:H_NJ-HCC1395-HCC1395\tLB:H_NJ-HCC1395-HCC1395-lg24-lib1\tPL:Illumina\tCN:WUGSC',

'@RG\tID:2895499237\tPU:H7HY2CCXX.4.ATCACGGT\tSM:H_NJ-HCC1395-HCC1395\tLB:H_NJ-HCC1395-HCC1395-lg24-lib1\tPL:Illumina\tCN:WUGSC'

]

// INPUTS (OPTIONAL)

final_name = "final.bam"

// PROCESS: ALIGN_AND_TAG

align_and_tag.cpus = 4

align_and_tag.memory = 10000

// PROCESS: INDEX_BAM

index_bam.memory = 10000

// PROCESS: MARK_DUPLICATES_AND_SORT

mark_duplicates_and_sort.script = "/home2/training/align_sort_markdup/templates/markduplicates_helper.sh"

mark_duplicates_and_sort.cpus = 4

mark_duplicates_and_sort.memory = 10000

// PROCESS: MERGE_BAMS_SAMTOOLS

merge_bams_samtools.cpus = 4

merge_bams_samtools.memory = 10000

// PROCESS: NAME_SORT

name_sort.cpus = 4

name_sort.memory = 10000

}
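Before launching, it can be worth confirming that every path in `bams` and `reference` actually exists, since a typo there only surfaces once a task starts. `check_inputs` below is a hypothetical pre-flight helper, not part of Janis or Nextflow; it reports missing paths on stderr and returns non-zero if any are absent.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check: report every path that does not exist.
check_inputs() {
    local missing=0
    for f in "$@"; do
        if [ ! -e "$f" ]; then
            echo "missing: $f" >&2
            missing=1
        fi
    done
    return "$missing"
}

data=/home2/training/data/sample_data/cwl
if check_inputs \
    "$data/2895499223.bam" "$data/2895499237.bam" \
    "$data/chr17_test.fa" "$data/chr17_test.fa.amb" "$data/chr17_test.fa.ann" \
    "$data/chr17_test.fa.bwt" "$data/chr17_test.fa.fai" "$data/chr17_test.dict" \
    "$data/chr17_test.fa.pac" "$data/chr17_test.fa.sa"; then
    echo "all inputs found"
else
    echo "fix the paths reported above in nextflow.config before running"
fi
```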

Run the Workflow

Ensure you are in the align_sort_markdup/ working directory, where nextflow.config and main.nf reside.

If not, use the following to change directory.

cd align_sort_markdup

To run the workflow using our sample data, we can now write the following command:

nextflow run main.nf

While the workflow runs, you will encounter this error:

Access to 'MARK_DUPLICATES_AND_SORT.out' is undefined since the process 'MARK_DUPLICATES_AND_SORT' has not been invoked before accessing the output attribute

This is somewhat expected. Janis translate doesn’t produce perfect translations - just the best it can do.

This is the first of 3 errors we will encounter and fix while making this workflow runnable.

Manual Adjustments¶

Translations performed by janis translate often require manual changes due to the difficulty of translating between languages with non-overlapping feature sets.

In this section we will fix 3 errors to bring the translation to a finished state.

NOTE

If you have trouble during this section, the finished workflow is available in the data/final/cwl/align_sort_markdup folder for reference.

Error 1: Process Order¶

Error message

The first issue we need to address is caused by tasks being in the wrong order.

Access to 'MARK_DUPLICATES_AND_SORT.out' is undefined since the process 'MARK_DUPLICATES_AND_SORT' has not been invoked before accessing the output attribute

This nextflow error message is quite informative, and tells us that a task is trying to access the output of MARK_DUPLICATES_AND_SORT before it has run.

The offending task is INDEX_BAM as it uses MARK_DUPLICATES_AND_SORT.out.sorted_bam.map{ tuple -> tuple[0] } as an input value to the process.

Troubleshooting

It seems that a nextflow process is trying to use the output of another process before that process has been called in the workflow.

This is most likely due to the processes in our main.nf workflow being in the wrong order.

Let’s look at the source CWL align_sort_markdup.cwl workflow to view the correct order of steps:

steps:

align:

...

merge:

...

name_sort:

...

mark_duplicates_and_sort:

...

index_bam:

...

This differs from our translated main.nf which has the following order:

workflow {

ALIGN(

...

)

INDEX_BAM(

...

)

MARK_DUPLICATES_AND_SORT(

...

)

MERGE(

...

)

NAME_SORT(

...

)

}
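To compare the two orders quickly, you can list the process calls in `main.nf` in the order they appear. The sketch below assumes, as in the translated file, that each call starts on its own line with an upper-case process name followed by `(`; `list_process_calls` is a hypothetical helper, and the inline file is a trimmed copy of the workflow block.

```shell
#!/usr/bin/env bash
# Print process calls (an UPPER_CASE name directly followed by "(") in file order.
list_process_calls() {
    grep -oE '^[[:space:]]*[A-Z][A-Z0-9_]*\(' "$1" | sed -E 's/^[[:space:]]*//; s/\($//'
}

# Trimmed copy of the translated workflow block.
main_nf=$(mktemp)
cat > "$main_nf" <<'EOF'
workflow {
    ALIGN(
        ch_bams.flatten(),
    )
    INDEX_BAM(
        MARK_DUPLICATES_AND_SORT.out.sorted_bam.map{ tuple -> tuple[0] }
    )
    MARK_DUPLICATES_AND_SORT(
        NAME_SORT.out.name_sorted_bam,
    )
}
EOF

list_process_calls "$main_nf"   # prints ALIGN, INDEX_BAM, MARK_DUPLICATES_AND_SORT
rm -f "$main_nf"
```

Lines such as `MARK_DUPLICATES_AND_SORT.out.sorted_bam...` are not reported, because the name is followed by `.` rather than `(`.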

Solution

We will need to rearrange the process calls in main.nf so they mirror the order seen in the source CWL.

Rearranging process calls

Cut-and-paste the process calls to be in the correct order in the main workflow.

Correct Order

- ALIGN

- MERGE

- NAME_SORT

- MARK_DUPLICATES_AND_SORT

- INDEX_BAM

Show Workflow

workflow {

ALIGN(

ch_bams.flatten(), // ch_bam

ch_reference, // ch_reference

ch_readgroups.flatten() // ch_readgroup

)

MERGE(

ALIGN.out.tagged_bam.toList(), // bams

params.final_name // name

)

NAME_SORT(

MERGE.out.merged_bam // bam

)

MARK_DUPLICATES_AND_SORT(

params.mark_duplicates_and_sort.script, // script

NAME_SORT.out.name_sorted_bam, // bam

params.NULL_VALUE, // input_sort_order

params.final_name // output_name

)

INDEX_BAM(

MARK_DUPLICATES_AND_SORT.out.sorted_bam.map{ tuple -> tuple[0] } // bam

)

}

After you are done, rerun the workflow by using the same command as before.

nextflow run main.nf

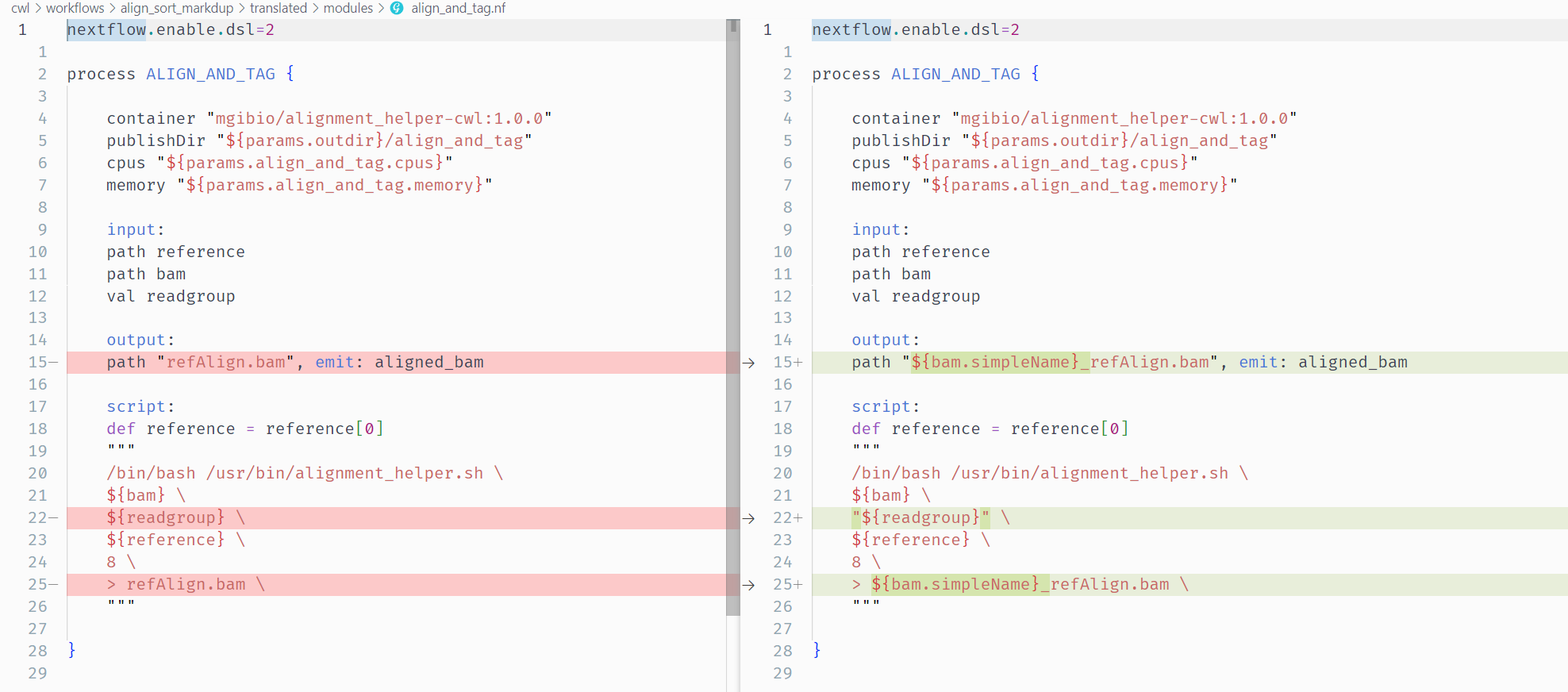

Error 2: Unquoted Strings¶

Error message

The second error is due to the readgroup input of the ALIGN_AND_TAG process being used without enclosing quotes.

Caused by:

Process `ALIGN:ALIGN_AND_TAG (1)` terminated with an error exit status (1)

Command executed:

/bin/bash /usr/bin/alignment_helper.sh 2895499223.bam @RG ID:2895499223 PU:H7HY2CCXX.3.ATCACGGT SM:H_NJ-HCC1395-HCC1395 LB:H_NJ-HCC1395-HCC1395-lg24-lib1 PL:Illumina CN:WUGSC chr17_test.fa 8 > refAlign.bam

The issue can be seen in the command above.

We see /bin/bash /usr/bin/alignment_helper.sh 2895499223.bam which is expected.

This is then followed by what looks like separate string arguments: @RG, then ID:2895499223 and the rest of the readgroup fields.

This is causing the problem.

Troubleshooting

The Command executed section in nextflow error messages is particularly useful.

This message is printed to the shell, but can also be seen by navigating to the process working directory & viewing .command.sh.
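Each task runs in its own hashed subdirectory of `work/`, two levels deep, and `.command.sh` sits inside it. One way to find the scripts generated for `ALIGN_AND_TAG` is to search them for the helper script name; `find_task_scripts` is a hypothetical helper that assumes the default `work/` location.

```shell
#!/usr/bin/env bash
# List .command.sh files under a Nextflow work directory that call alignment_helper.sh.
find_task_scripts() {
    local work_dir="${1:-work}"
    grep -l 'alignment_helper.sh' "$work_dir"/*/*/.command.sh 2>/dev/null
}

# Usage, from the align_sort_markdup/ directory:
#   find_task_scripts work
#   cat "$(find_task_scripts work | head -n 1)"
```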

Let’s look at the nextflow ALIGN_AND_TAG process so we can match up which process input is causing the error.

Individual arguments have been marked with their (position) to aid our investigation.

process ALIGN_AND_TAG {

container "mgibio/alignment_helper-cwl:1.0.0"

publishDir "${params.outdir}/align_and_tag"

cpus "${params.align_and_tag.cpus}"

memory "${params.align_and_tag.memory}"

input:

path reference

path bam

val readgroup

output:

path "refAlign.bam", emit: aligned_bam

script:

def reference = reference[0]

"""

(1) /bin/bash (2) /usr/bin/alignment_helper.sh \

(3) ${bam} \

(4) ${readgroup} \

(5) ${reference} \

(6) 8 \

(7) > (8) refAlign.bam

"""

}

Here is the command executed with the same numbering:

(1) /bin/bash (2) /usr/bin/alignment_helper.sh (3) 2895499223.bam (4) @RG (5) ID:2895499223 PU:H7HY2CCXX.3.ATCACGGT SM:H_NJ-HCC1395-HCC1395 LB:H_NJ-HCC1395-HCC1395-lg24-lib1 PL:Illumina CN:WUGSC (6) chr17_test.fa (7) 8 (8) > (9) refAlign.bam

Matching up the two, we can see that arguments (1-3) match their expected values.

Argument (4) starts out right, as we expect the readgroup input.

Looking in the script: section of the nextflow process, we expect the ${reference} input to appear as argument (5), but in the actual command it appears as argument (6) chr17_test.fa.

The issue seems to be that the readgroup input has been split into several strings, instead of being passed as 1 single string.

By tracing back through the workflow, we can track that params.readgroups supplies the value for readgroup in this nextflow process:

modules/align_and_tag.nf: readgroup

subworkflows/align.nf: ch_readgroup

main.nf: ch_readgroups.flatten()

main.nf: ch_readgroups = Channel.of( params.readgroups ).toList()

Looking at nextflow.config we see that this particular readgroup value is supplied as follows:

@RG\tID:2895499223\tPU:H7HY2CCXX.3.ATCACGGT\tSM:H_NJ-HCC1395-HCC1395\tLB:H_NJ-HCC1395-HCC1395-lg24-lib1\tPL:Illumina\tCN:WUGSC

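The @RG has been split from the rest of the readgroup value, and the fields which follow have been split apart in the command too.

The issue here is that this value in params.readgroups contains tab characters (\t), which the shell treats as argument separators in the same way as spaces.

When used in a nextflow process script, string arguments that may contain whitespace should be enclosed in double quotes ("") so they are passed as a single argument.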
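The effect is easy to reproduce in plain shell. The sketch below builds a shortened read group containing real tab characters (as the `\t` escapes in the Groovy string in nextflow.config produce), then counts the arguments a throwaway `count_args` function receives with and without quotes.

```shell
#!/usr/bin/env bash
# Shortened read group; printf turns each \t into a real tab character.
readgroup=$(printf '@RG\tID:2895499223\tSM:H_NJ-HCC1395-HCC1395\tPL:Illumina')

# Print the number of arguments received.
count_args() { echo "$#"; }

count_args ${readgroup}     # unquoted: split at every tab, prints 4
count_args "${readgroup}"   # quoted: passed as one argument, prints 1
```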

Solution

Back in the modules/align_and_tag.nf file, let’s properly enclose the readgroup input in quotes.

align_and_tag.nf

In the ALIGN_AND_TAG process script enclose the ${readgroup} reference in quotes.

Show Change

script:

def reference = reference[0]

"""

/bin/bash /usr/bin/alignment_helper.sh \

${bam} \

"${readgroup}" \ <- quotes added

${reference} \

8 \

> refAlign.bam

"""

After you have made the change, re-run the workflow using the same command as before:

nextflow run main.nf

Error 3: Filename Clashes¶

Error Message

The final error is flagged by the nextflow workflow engine.

Upon re-running the workflow, you will encounter the following message:

Process `MERGE` input file name collision -- There are multiple input files for each of the following file names: refAlign.bam

This informs us that more than 1 file named refAlign.bam has been supplied as input to the MERGE process.

Nextflow does not allow this behaviour.

Other workflow engines use temporary names for files so that name clashes are impossible.

Nextflow requires us to be more specific with our filenames so we can track which file is which.

This is not a hard-and-fast rule of workflow engines, but nextflow enforces unique names to encourage best practices.

Troubleshooting

To track the cause, let’s look at the data which feeds the MERGE process.

main.nf

MERGE(

ALIGN.out.tagged_bam.toList(), // bams

params.final_name // name

)

We can see the translated nextflow workflow is collecting the tagged_bam output of all ALIGN tasks as a list using .toList().

This value feeds the bams input of the MERGE process.

The issue is that 2+ files in this list must have the same filename: “refAlign.bam”.
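Nextflow stages every input into the task directory under its file name alone, which is why two files called refAlign.bam collide. To spot such clashes in a list of paths, a small sketch reduces each path to its base name and prints any repeats; `find_name_clashes` is a hypothetical helper and the example paths are made-up task directories.

```shell
#!/usr/bin/env bash
# Print every file name that occurs more than once among the given paths.
find_name_clashes() {
    for p in "$@"; do
        basename "$p"
    done | sort | uniq -d
}

# Hypothetical outputs from two ALIGN_AND_TAG tasks in separate work directories.
find_name_clashes work/1a/aaaa/refAlign.bam work/2b/bbbb/refAlign.bam
# prints: refAlign.bam
```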

We need to see how files end up being placed into ALIGN.out.tagged_bam to track where the error is occurring.

Looking in the ALIGN subworkflow in subworkflows/align.nf, we see the offending tagged_bam output emit.

This gets its data from the aligned_bam output of the ALIGN_AND_TAG process in modules/align_and_tag.nf.

The source of the issue must be that the aligned_bam output is producing the same filename each time the task is run.

Opening modules/align_and_tag.nf, we can see how the aligned_bam output is created & collected:

output:

path "refAlign.bam", emit: aligned_bam <-

script:

def reference = reference[0]

"""

/bin/bash /usr/bin/alignment_helper.sh \

${bam} \

"${readgroup}" \

${reference} \

8 \

> refAlign.bam <-

"""

From looking at the output and script section of this process, we can see that the aligned_bam output will always have the same filename.

In the script, we generate a file called refAlign.bam by redirecting stdout to this file.

In the output, refAlign.bam is collected as the output.

This would be fine if our workflow was supplied only a single BAM input file, but we want our workflow to run when we have many BAMs.

Solution

To fix this issue, we need to give the output files unique names.

This can be accomplished using a combination of the path bam process input and its .simpleName attribute.

As our input BAM files will all have unique names, we can use their names as a base for our output alignments. If we were to use their filenames directly, we would get their file extension too, which we don’t want!

Luckily, the .simpleName attribute of a nextflow file (path) returns the name of the file without its directory path or extensions.

For example, imagine we have a process input path reads.

Suppose it gets fed the value "data/SRR1234.fq" at runtime.

Calling ${reads.simpleName} in the process script would yield "SRR1234".

Note that directory path and the extension have been trimmed out!
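For intuition, the same trimming can be written with shell parameter expansion. This is only an analogue of `.simpleName`, assuming it keeps the part of the file name before the first `.` (consistent with the `SRR1234` example above); `simple_name` is a hypothetical helper, not Nextflow's implementation.

```shell
#!/usr/bin/env bash
# Rough shell analogue of Nextflow's .simpleName:
# drop the directory part, then everything from the first '.' onwards.
simple_name() {
    local name="${1##*/}"   # data/SRR1234.fq -> SRR1234.fq
    echo "${name%%.*}"      # SRR1234.fq      -> SRR1234
}

simple_name "data/SRR1234.fq"   # prints SRR1234
echo "$(simple_name /home2/training/data/sample_data/cwl/2895499223.bam)_refAlign.bam"
# prints 2895499223_refAlign.bam
```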

align_and_tag.nf

Script section:

Use .simpleName to generate an output filename based on the path bam process input.

Add _refAlign.bam onto the end of this filename for clarity.

Output section:

Alter the collection expression for the aligned_bam output to collect this file.

Show Change

process ALIGN_AND_TAG {

container "mgibio/alignment_helper-cwl:1.0.0"

publishDir "${params.outdir}/align_and_tag"

cpus "${params.align_and_tag.cpus}"

memory "${params.align_and_tag.memory}"

input:

path reference

path bam

val readgroup

output:

path "${bam.simpleName}_refAlign.bam", emit: aligned_bam <-

script:

def reference = reference[0]

"""

/bin/bash /usr/bin/alignment_helper.sh \

${bam} \

"${readgroup}" \

${reference} \

8 \

> ${bam.simpleName}_refAlign.bam <-

"""

}

After you have made these changes, re-run the workflow using the same command as before:

nextflow run main.nf

With any luck, this will fix the remaining issues and the workflow will now run to completion.

Completed Workflow¶

Once completed, we can check the outputs/ folder to view our results.

If everything went well, the outputs/ folder should have the following structure:

outputs

├── align_and_tag

│ ├── 2895499223_refAlign.bam

│ └── 2895499237_refAlign.bam

├── index_bam

│ ├── final.bam

│ └── final.bam.bai

├── mark_duplicates_and_sort

│ ├── final.bam

│ ├── final.bam.bai

│ └── final.mark_dups_metrics.txt

├── merge_bams_samtools

│ └── final.bam.merged.bam

└── name_sort

└── final.NameSorted.bam

If having trouble, the finished workflow is also available in the data/final/cwl/align_sort_markdup/ folder for reference.

In addition, the following diffs show the changes we made to files during manual adjustment of the workflow.

main.nf

align_and_tag.nf

Conclusion¶

In this section we explored how to translate the align_sort_markdup CWL Workflow to Nextflow using janis translate.

This is the end of section 1.

Let’s take a break!

In section 2 we will be working with Galaxy, and will translate some Galaxy Tool Wrappers and Workflows into Nextflow as seen with CWL.

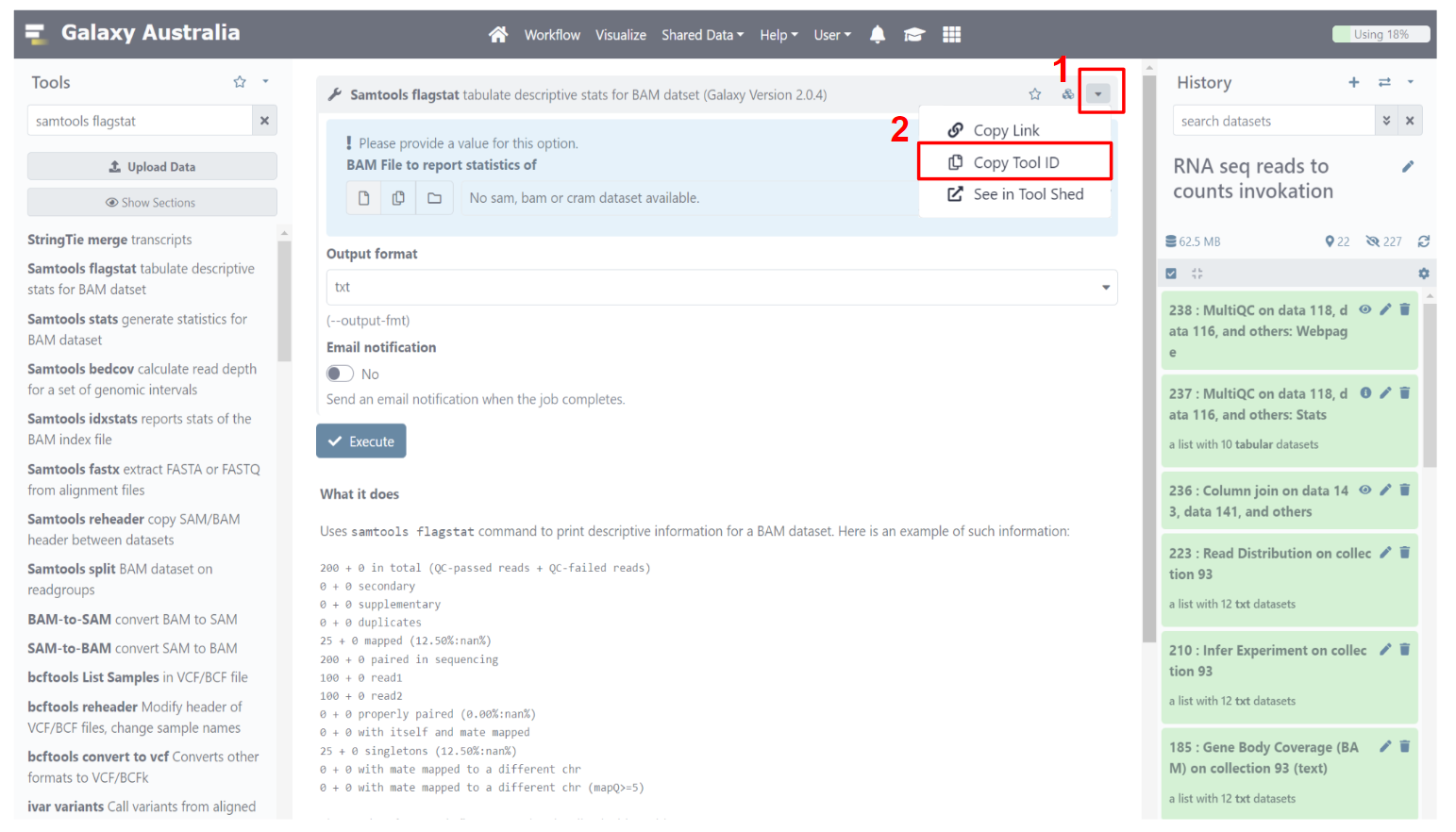

Section 2.1: Samtools Flagstat Tool (Galaxy)¶

Introduction¶

This section demonstrates translation of a basic samtools flagstat Galaxy Tool Wrapper to Nextflow using janis translate.

The Galaxy Tool Wrapper used in this section was created by contributors to the Galaxy Devteam repository of tools.

The underlying software used in the Galaxy Tool Wrapper - samtools_flagstat - displays summary information for an alignment file.

Janis Translate¶

As in the previous sections we will use janis-translate.

This time instead of CWL -> Nextflow, we will do a Galaxy -> Nextflow translation.

Aside from local filepaths, janis translate can also access Galaxy Tool Wrappers using a tool ID.

We will use this method today as it is an easier way to access Tool Wrappers.

To get the samtools_flagstat Tool ID, navigate to the tool on any usegalaxy server (such as usegalaxy.org or usegalaxy.org.au).

The following is a link to the samtools flagstat tool in Galaxy Australia:

https://usegalaxy.org.au/root?tool_id=toolshed.g2.bx.psu.edu/repos/devteam/samtools_flagstat/samtools_flagstat/2.0.4.

Once here, we will copy the Tool ID.

At time of writing, the current Tool ID for the samtools_flagstat tool wrapper is toolshed.g2.bx.psu.edu/repos/devteam/samtools_flagstat/samtools_flagstat/2.0.4
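A ToolShed tool ID is a path: the ToolShed host, `repos`, the repository owner, the repository name, the tool's ID within that repository, and the tool version. As a small sketch, the shell's `read` can split the ID above into those parts; the variable names are just descriptive labels.

```shell
#!/usr/bin/env bash
tool_id="toolshed.g2.bx.psu.edu/repos/devteam/samtools_flagstat/samtools_flagstat/2.0.4"

# Split on '/' into: host, the literal "repos", owner, repository, tool, version.
IFS=/ read -r host repos owner repository tool version <<EOF
$tool_id
EOF

echo "owner=$owner repository=$repository tool=$tool version=$version"
# prints: owner=devteam repository=samtools_flagstat tool=samtools_flagstat version=2.0.4
```

Pinning the full ID, version included, keeps the translation tied to one specific release of the wrapper.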

Now we have the Tool ID, we can access & translate this Galaxy Tool Wrapper to a Nextflow process.

We will add -o samtools_flagstat to our command to set the output directory.

First, let’s make sure we’re in the training directory:

cd /home2/training

To translate the Galaxy Tool, run the following command:

singularity exec ~/janis.sif janis translate -o samtools_flagstat --from galaxy --to nextflow toolshed.g2.bx.psu.edu/repos/devteam/samtools_flagstat/samtools_flagstat/2.0.4

Once complete, you will see a folder called samtools_flagstat/ appear, and a nextflow process called samtools_flagstat.nf will be present inside.

For your own reference / interest, the actual Galaxy Tool Wrapper files will be downloaded during translation & will be presented to you in samtools_flagstat/source/.

Manual Adjustments¶

The samtools_flagstat/samtools_flagstat.nf file should be similar to the following:

nextflow.enable.dsl=2

process SAMTOOLS_FLAGSTAT {

container "quay.io/biocontainers/samtools:1.13--h8c37831_0"

input:

path input1

val addthreads

output:

path "output1.txt", emit: output1

script:

"""

samtools flagstat \

--output-fmt "txt" \

-@ ${addthreads} \

${input1} \

> output1.txt

"""

}

We can see that this nextflow process has two inputs, a single output, and calls samtools flagstat.

Before continuing, let’s check the samtools flagstat documentation. In the documentation, we see the following:

samtools flagstat in.sam|in.bam|in.cram

-@ INT

Set number of additional threads to use when reading the file.

--output-fmt/-O FORMAT

Set the output format. FORMAT can be set to `default', `json' or `tsv' to select the default, JSON or tab-separated values output format. If this option is not used, the default format will be selected.

By matching up the process inputs: section and the script: section, we can see that:

- path input1 will be the input sam | bam | cram

- val addthreads will be the threads argument passed to -@

- the --output-fmt option has been assigned the default value of "txt"

We can also see that a container image is available for this tool.

This translation is correct for the samtools_flagstat Galaxy Tool Wrapper and needs no adjusting.

Note:

If you would like to expose the --output-fmt option as a process input, you can do the following:

- add a val format input to the process

- reference this input in the script, replacing the hardcoded "txt" value (e.g. --output-fmt ${format})

Running Samtools Flagstat as a Workflow¶

Setting up nextflow.config

To run this process, we will set up a nextflow.config file and add some lines to the top of our process definition to turn it into a workflow.

Create a new file called nextflow.config in the samtools_flagstat/ folder alongside samtools_flagstat.nf.

Copy and paste the following code into your nextflow.config file:

nextflow.enable.dsl = 2

singularity.enabled = true

singularity.cacheDir = "$HOME/.singularity/cache"

params {

bam = "/home2/training/data/sample_data/galaxy/samtools_flagstat/samtools_flagstat_input1.bam"

threads = 1

}

This tells nextflow how to run, and sets up input parameters we can use to supply values to the SAMTOOLS_FLAGSTAT process:

- The bam parameter is the input bam file we wish to analyse.

- The threads parameter is an integer, and controls how many additional compute threads to use during runtime.

From here, we can refer to these inputs as params.bam / params.threads in other files.

Creating Workflow & Passing Data

Now that we have the nextflow.config file set up, we will add a few lines to samtools_flagstat.nf to turn it into a workflow.

Copy and paste the following lines at the top of samtools_flagstat.nf:

nextflow.enable.dsl=2

ch_bam = Channel.fromPath( params.bam )

workflow {

SAMTOOLS_FLAGSTAT(

ch_bam, // input1

params.threads // addthreads

)

SAMTOOLS_FLAGSTAT.out.output1.view()

}

The first line creates a nextflow Channel for our bam input.

The params.bam global variable we set up previously is used to supply the path to our sample data.

The new workflow {} section declares the main workflow entry point.

When we run this file, nextflow will look for this section and run the workflow contained within.

In our case, the workflow only contains a single task, which runs the SAMTOOLS_FLAGSTAT process defined below the workflow section. We then supply input values to SAMTOOLS_FLAGSTAT using the ch_bam channel we created for input1, and params.threads for the addthreads input.

Adding publishDir directive

So that we can collect the output of SAMTOOLS_FLAGSTAT when it runs, we will add a publishDir directive to the process:

process SAMTOOLS_FLAGSTAT {

container "quay.io/biocontainers/samtools:1.13--h8c37831_0"

publishDir "./outputs"

...

}

Now that we have set up SAMTOOLS_FLAGSTAT as a workflow, we can run it and check the output.

Running Our Workflow

Ensure you are in the samtools_flagstat/ working directory, where nextflow.config and samtools_flagstat.nf reside.

cd samtools_flagstat

To run the workflow using our sample data, we can now write the following command:

nextflow run samtools_flagstat.nf

Once completed, check the ./outputs folder inside samtools_flagstat/.

If everything went well, you should see a single file called output1.txt with the following contents:

200 + 0 in total (QC-passed reads + QC-failed reads)

200 + 0 primary

0 + 0 secondary

0 + 0 supplementary

0 + 0 duplicates

0 + 0 primary duplicates

25 + 0 mapped (12.50% : N/A)

25 + 0 primary mapped (12.50% : N/A)

200 + 0 paired in sequencing

100 + 0 read1

100 + 0 read2

0 + 0 properly paired (0.00% : N/A)

0 + 0 with itself and mate mapped

25 + 0 singletons (12.50% : N/A)

0 + 0 with mate mapped to a different chr

0 + 0 with mate mapped to a different chr (mapQ>=5)
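Each percentage is a count divided by the total. For the mapped line, 25 / 200 × 100 = 12.50%. The sketch below recomputes that figure from `output1.txt` with `awk`; `mapped_pct` is a hypothetical helper, and it assumes the `<passed> + <failed> <label>` line layout shown above, using the QC-passed counts.

```shell
#!/usr/bin/env bash
# Recompute the "mapped" percentage (QC-passed reads) from samtools flagstat text output.
mapped_pct() {
    awk '$4 == "in" && $5 == "total" { total = $1 }
         $4 == "mapped"              { mapped = $1 }
         END { if (total > 0) printf "%.2f\n", 100 * mapped / total }' "$1"
}

# Sample lines copied from the expected output above.
stats=$(mktemp)
cat > "$stats" <<'EOF'
200 + 0 in total (QC-passed reads + QC-failed reads)
25 + 0 mapped (12.50% : N/A)
25 + 0 primary mapped (12.50% : N/A)
EOF

mapped_pct "$stats"   # prints 12.50
rm -f "$stats"

# On the VM: mapped_pct outputs/output1.txt
```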

If needed, you can check the data/final/galaxy/samtools_flagstat folder as a reference.

Conclusion¶

In this section we explored how to translate a simple Galaxy Tool to a Nextflow process.

We will build on this in the next section, where we translate a much more complex Galaxy Tool Wrapper: limma.

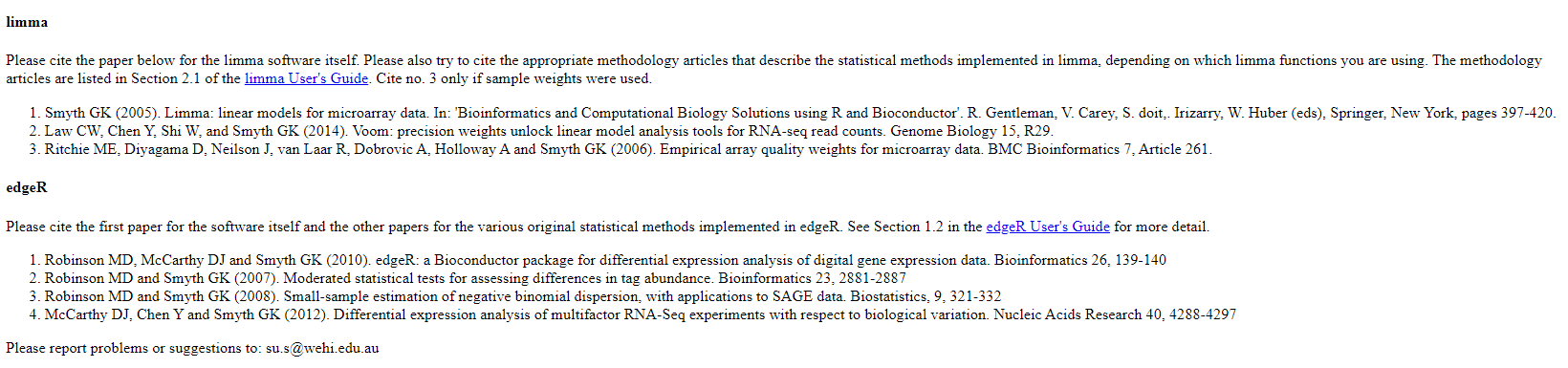

Section 2.2: Limma Voom Tool (Galaxy)¶

Introduction¶

This section demonstrates translation of the limma tool from Galaxy to Nextflow using janis translate.

The aim of this section is to document a more challenging translation, as this Galaxy Tool Wrapper is complex and uses an Rscript to run its analysis.

Shian Su is the original author of the limma Galaxy tool wrapper we are translating today.

Maria Doyle has also contributed maintenance and upgrades many times over its years of use.

Limma is an R package which analyses gene expression using microarray or RNA-Seq data.

The most common use (at time of writing) is referred to as limma-voom, which performs Differential Expression (DE) analysis on RNA-Seq data between multiple samples.

The voom part of limma-voom is functionality within limma which adapts limma to use RNA-Seq data instead of microarray data.

Since RNA-Seq data has become more common than microarray data at time of writing, limma-voom is likely the most popular use of the limma package as a workflow.

Under the hood, the Galaxy limma tool contains an R script which configures & runs limma based on command-line inputs.

This allows Galaxy users to run limma as a complete workflow, rather than as individual R library functions.

The Limma package has had many contributors over the years, including but not limited to:

- Gordon K. Smyth

- Matthew Ritchie

- Natalie Thorne

- James Wettenhall

- Wei Shi

- Yifang Hu

See the following articles related to Limma:

- Limma package capabilities (new and old): Ritchie, ME, Phipson, B, Wu, D, Hu, Y, Law, CW, Shi, W, and Smyth, GK (2015). limma powers differential expression analyses for RNA-sequencing and microarray studies. Nucleic Acids Research 43(7), e47.

- Limma for DE analysis: Phipson, B, Lee, S, Majewski, IJ, Alexander, WS, and Smyth, GK (2016). Robust hyperparameter estimation protects against hypervariable genes and improves power to detect differential expression. Annals of Applied Statistics 10(2), 946–963.

- Limma-Voom for DE analysis of RNA-Seq data: Law, CW, Chen, Y, Shi, W, and Smyth, GK (2014). Voom: precision weights unlock linear model analysis tools for RNA-seq read counts. Genome Biology 15, R29.

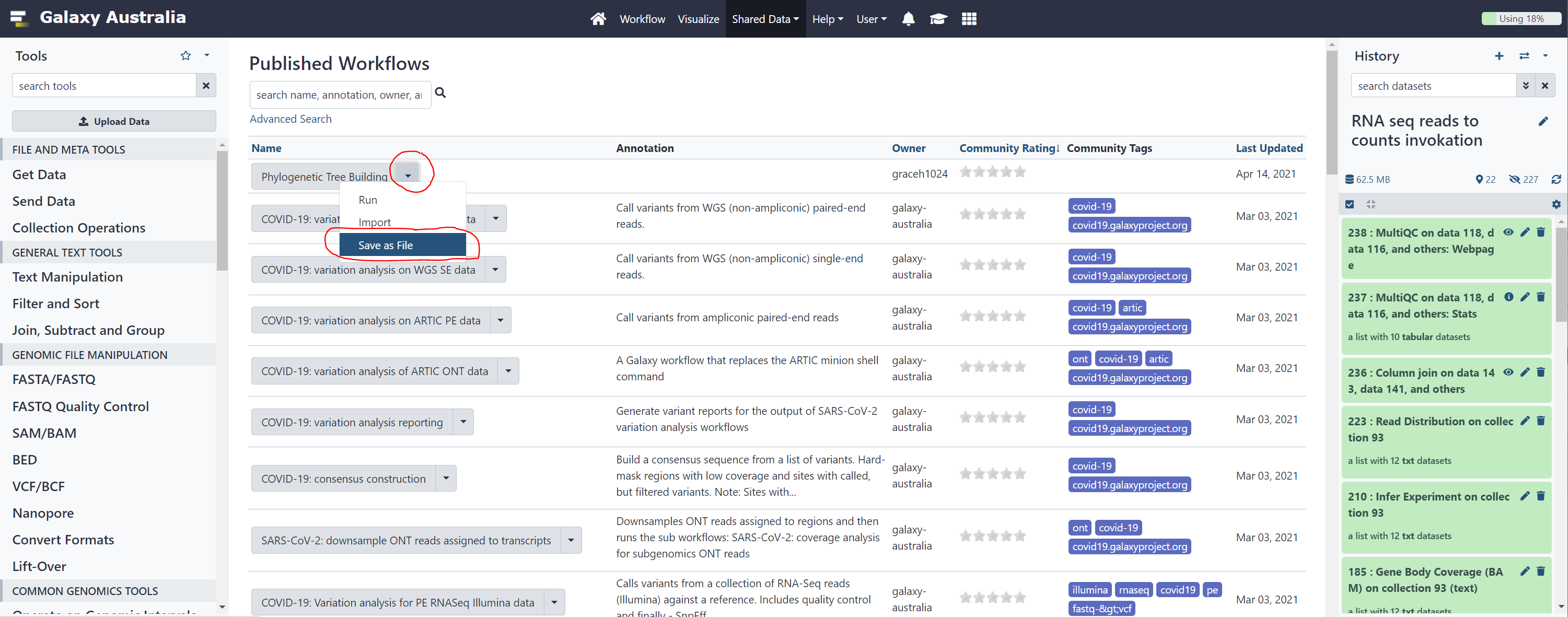

Janis Translate¶

We will run janis-translate in the same manner as section 2.1.

To get the Tool ID you can either:

- Go to the Galaxy Australia server & copy the Tool ID from the limma tool, or

- Copy the following ID: toolshed.g2.bx.psu.edu/repos/iuc/limma_voom/limma_voom/3.50.1+galaxy0

We add -o limma_voom to our command to specify this as the output directory.

As always, let’s make sure we’re back in the training folder:

cd /home2/training

To translate, run the following command:

singularity exec ~/janis.sif janis translate -o limma_voom --from galaxy --to nextflow toolshed.g2.bx.psu.edu/repos/iuc/limma_voom/limma_voom/3.50.1+galaxy0

Once complete, you will see a folder called limma_voom appear, and a nextflow process called limma_voom.nf will be present inside.

For your own reference / interest, the actual Galaxy Tool Wrapper files will be downloaded during translation & presented to you in limma_voom/source.

Manual Adjustments¶

Inside the limma_voom/ folder, we see the following:

- A Nextflow process named limma_voom.nf

- An Rscript named limma_voom.R

- A folder named source/ containing the Galaxy Tool Wrapper XML

Unlike previous tool translations, the limma_voom.nf process will need adjusting.

limma_voom.nf

The limma_voom/limma_voom.nf file is our nextflow process. It should be similar to the following:

nextflow.enable.dsl = 2

process LIMMA_VOOM {

container "quay.io/biocontainers/quay.io/biocontainers/bioconductor-limma:3.50.1--r41h5c21468_0"

input:

path anno_geneanno

path cont_cinfo

path input_counts

path input_fact_finfo

path limma_voom_script

path out_report1

val out_report_files_path

output:

path "output_dir/*_filtcounts", emit: outFilt

path "output_dir/*_normcounts", emit: outNorm

path "outReport.html", emit: outReport2

path "output_dir/*.tsv?", emit: outTables

path "libsizeinfo", emit: out_libinfo

path "unknown_collection_pattern", emit: out_rscript

script:

"""

Rscript \

${limma_voom_script} \

-C ${cont_cinfo} \

-R ${out_report1} \

-a ${anno_geneanno} \

-f ${input_fact_finfo} \

-m ${input_counts} \

-G 10 \

-P "i" \

-c 1 \

-d "BH" \

-j "" \

-l 0 \

-n "TMM" \

-o ${out_report_files_path} \

-p 0.05 \

-s 0 \

-t 3 \

-z 0

"""

}

We see that this nextflow process has multiple inputs, many command line arguments, and multiple outputs.

Look at the command line arguments for this process in the script: block.

This Galaxy Tool Wrapper is evidently running an Rscript which we supply to the process via the path limma_voom_script input.

In the script section we see that this Rscript will be run, and has some CLI arguments which follow.

When translating Galaxy Tool Wrappers, we often see this usage of scripts.

limma is an R library, so we need an Rscript to run the analysis we want.

The Galaxy Tool Wrapper supplies user inputs to this script, then the script will run the analysis.

NOTE

janis translate will copy across any scripts referenced by a source Tool / Workflow.

The limma_voom/limma_voom.R file is the Rscript which this Galaxy Tool Wrapper uses to run an analysis.

limma_voom.R

From reading the nextflow process, it isn’t particularly obvious what each command line argument does.

We know that the process inputs are supplied to the limma_voom.R script, but their names aren’t very descriptive.

Luckily, the author of this script has good documentation at the top of the script to help us out.

Open limma_voom.R.

At the top of the file, we see some documentation:

# This tool takes in a matrix of feature counts as well as gene annotations and

# outputs a table of top expressions as well as various plots for differential

# expression analysis

#

# ARGS: htmlPath", "R", 1, "character" -Path to html file linking to other outputs

# outPath", "o", 1, "character" -Path to folder to write all output to

# filesPath", "j", 2, "character" -JSON list object if multiple files input

# matrixPath", "m", 2, "character" -Path to count matrix

# factFile", "f", 2, "character" -Path to factor information file

# factInput", "i", 2, "character" -String containing factors if manually input

# annoPath", "a", 2, "character" -Path to input containing gene annotations

# contrastFile", "C", 1, "character" -Path to contrasts information file

# contrastInput", "D", 1, "character" -String containing contrasts of interest

# cpmReq", "c", 2, "double" -Float specifying cpm requirement

# cntReq", "z", 2, "integer" -Integer specifying minimum total count requirement

# sampleReq", "s", 2, "integer" -Integer specifying cpm requirement

# normCounts", "x", 0, "logical" -String specifying if normalised counts should be output

# rdaOpt", "r", 0, "logical" -String specifying if RData should be output

# lfcReq", "l", 1, "double" -Float specifying the log-fold-change requirement

# pValReq", "p", 1, "double" -Float specifying the p-value requirement

# pAdjOpt", "d", 1, "character" -String specifying the p-value adjustment method

# normOpt", "n", 1, "character" -String specifying type of normalisation used

# robOpt", "b", 0, "logical" -String specifying if robust options should be used

# trend", "t", 1, "double" -Float for prior.count if limma-trend is used instead of voom

# weightOpt", "w", 0, "logical" -String specifying if voomWithQualityWeights should be used

# topgenes", "G", 1, "integer" -Integer specifying no. of genes to highlight in volcano and heatmap

# treatOpt", "T", 0, "logical" -String specifying if TREAT function should be used

# plots, "P", 1, "character" -String specifying additional plots to be created

#

# OUT:

# Density Plots (if filtering)

# Box Plots (if normalising)

# MDS Plot

# Voom/SA plot

# MD Plot

# Volcano Plot

# Heatmap

# Expression Table

# HTML file linking to the ouputs

# Optional:

# Normalised counts Table

# RData file

#

#

# Author: Shian Su - registertonysu@gmail.com - Jan 2014

# Modified by: Maria Doyle - Jun 2017, Jan 2018, May 2018

For each process input, find the command line argument it feeds, then look up the argument documentation in limma_voom.R.

For example, the anno_geneanno process input feeds the -a argument.

Looking at the documentation, we see that this is the gene annotations file.

# limma_voom.nf

-a ${anno_geneanno} \

# limma_voom.R

annoPath", "a", 2, "character" -Path to input containing gene annotations

The cont_cinfo process input feeds the -C argument, which is a file containing contrasts of interest:

# limma_voom.nf

-C ${cont_cinfo} \

# limma_voom.R

contrastFile", "C", 1, "character" -Path to contrasts information file
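If you would rather not scroll through limma_voom.R for every process input, a quick grep can pull out the documentation line for a given flag. The sketch below wraps that in a small shell function. The name arg_doc is hypothetical and is not part of janis-translate. The function assumes the documentation lines follow the `name", "flag", ...` layout shown above.

```shell
# Hypothetical helper (not part of janis-translate): print the first line in
# limma_voom.R that documents a given single-letter getopt flag.
# Usage: arg_doc <flag-letter> [path/to/limma_voom.R]
arg_doc() {
    local flag="$1"
    local script="${2:-limma_voom.R}"
    # Documentation lines look like: annoPath", "a", 2, "character" -Path to ...
    # -F matches the pattern literally. Matching is case-sensitive, so -c and -C differ.
    grep -F -- "\", \"${flag}\", " "$script" | head -n 1
}
```

For example, arg_doc C limma_voom.R should print a line mentioning contrastFile. Case-sensitive matching matters here because the script section uses both -c and -C, as well as -p and -P.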

Now that we have looked at how the LIMMA_VOOM Nextflow process will run, let's make some adjustments so that it functions as intended.

Modifying Container

The first thing to do is change the container directive.

Galaxy uses conda to handle tool requirements.

For Galaxy Tool Wrappers with a single requirement, janis-translate simply fetches a container for that requirement by looking it up in the quay.io registries. Wrappers with two or more requirements are more complicated, because there is often no single existing image that provides all of them.

For the limma Galaxy Tool Wrapper, the following are listed:

- bioconductor-limma==3.50.1

- bioconductor-edger==3.36.0

- r-statmod==1.4.36

- r-scales==1.1.1

- r-rjson==0.2.21

- r-getopt==1.20.3

- r-gplots==3.1.1

- bioconductor-glimma==2.4.0

For best-practice pipelines, we want to use containers instead of conda, and we want a single image that provides all of these requirements.

To facilitate this, janis-translate has a new experimental feature: --build-galaxy-tool-images

When this option is used, janis-translate builds a single container image containing all of the tool's software requirements as part of the translation.

This feature requires Docker, which is not available on our training VMs. Building an image can also take anywhere from 2 to 15 minutes. For both reasons, we won't use it today.

Instead, we have used janis-translate to pre-build images for the relevant tools and pushed them to a quay.io repository.

Swapping container directive

Change the LIMMA_VOOM process container directive from:

quay.io/biocontainers/bioconductor-limma:3.50.1--r41h5c21468_0

To:

quay.io/grace_hall1/limma-voom:3.50.1

After the change, the top of the process should look like this:

process LIMMA_VOOM {
    container "quay.io/grace_hall1/limma-voom:3.50.1"  // changed

    input:
    path anno_geneanno
    path cont_cinfo
    path input_counts
    path input_fact_finfo
    path limma_voom_script
    path out_report1
    val out_report_files_path
    ...
}
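Before running anything, it is worth confirming that limma_voom.nf now points at the new image. The sketch below assumes the container directive sits on its own line. The helper name show_container is hypothetical.

```shell
# Hypothetical helper: print every container directive (with its line number)
# in a Nextflow script, so you can confirm which image the process will use.
# Usage: show_container [path/to/limma_voom.nf]
show_container() {
    # Match lines that start (after optional indentation) with the word "container".
    grep -nE '^[[:space:]]*container[[:space:]]' "${1:-limma_voom.nf}"
}
```

Run show_container limma_voom.nf from the limma_voom/ folder. It should print the line number and the quay.io/grace_hall1/limma-voom:3.50.1 directive.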

Add publishDir Directive

While we’re modifying directives, let’s add a publishDir directive.

This specifies a folder where the outputs of this process will be published once the task completes.

Adding publishDir Directive

Add a publishDir directive for the process with the path "./outputs".

After adding it, the top of the process should look like this:

process LIMMA_VOOM {
    container "quay.io/grace_hall1/limma-voom:3.50.1"
    publishDir "./outputs"  // added
    ...
}

Modifying Inputs

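The out_report1 process input, currently declared as path, specifies the name of the HTML file that will present our results.

We aren't supplying an existing file. Instead, we are giving the script a filename to write to, which should be a string. A path input tells Nextflow to stage an existing file into the task's work directory, whereas a val input passes the value through unchanged.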

Modifying inputs: html path

Change the type of the out_report1 input from path to val.

After the change, the inputs should look like this:

process LIMMA_VOOM {
    container "quay.io/grace_hall1/limma-voom:3.50.1"
    publishDir "./outputs"

    input:
    path anno_geneanno
    path cont_cinfo
    path input_counts
    path input_fact_finfo
    path limma_voom_script
    val out_report1  // changed from path to val
    val out_report_files_path
    ...
}

Modifying Script

From reading the documentation, you may have noticed that one particular argument isn't needed.

The -j argument is only needed when there are multiple input files. We are using a single input counts file (supplied via -m), so we can remove this argument.

Modifying script: -j argument

Remove the -j argument and its value from the script section.

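After the change, the script section should look like this. The -j "" line that previously followed -l 0 has been removed.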

process LIMMA_VOOM {
    ...
    script:
    """
    Rscript \
    ${limma_voom_script} \
    -C ${cont_cinfo} \
    -R ${out_report1} \
    -a ${anno_geneanno} \
    -f ${input_fact_finfo} \
    -m ${input_counts} \
    -G 10 \
    -P "i" \
    -c 1 \
    -d "BH" \
    -l 0 \
    -n "TMM" \
    -o ${out_report_files_path} \
    -p 0.05 \
    -s 0 \
    -t 3 \
    -z 0
    """
}
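A leftover -j line is easy to miss in a long argument list. The hypothetical flag_lines helper below prints any line that starts with a given flag, so empty output for j means the argument is gone. This is a sketch that assumes each flag starts its own line, as in the script section above.

```shell
# Hypothetical helper: print (with line numbers) every line whose first
# non-blank text is the given flag, e.g. "-j".
# Usage: flag_lines <flag-letter> [path/to/limma_voom.nf]
flag_lines() {
    local flag="$1"
    local file="${2:-limma_voom.nf}"
    # The flag must be followed by whitespace or end-of-line, so -j does not match -jx.
    grep -nE -- "^[[:space:]]*-${flag}([[:space:]]|\$)" "$file"
}
```

After your edit, flag_lines j limma_voom.nf should print nothing.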

Modifying Outputs

Galaxy Tool Wrappers often allow the user to request extra, optional outputs.

For this tutorial, we're not interested in any of the optional outputs, only the single outReport2 output.

Modifying outputs

Remove all the process outputs except outReport2.

After the change, the inputs and outputs should look like this:

process LIMMA_VOOM {
    container "quay.io/grace_hall1/limma-voom:3.50.1"
    publishDir "./outputs"

    input:
    path anno_geneanno
    path cont_cinfo
    path input_counts
    path input_fact_finfo
    path limma_voom_script
    val out_report1
    val out_report_files_path

    output:
    path "outReport.html", emit: outReport2  // all other outputs removed
    ...
}

Your Nextflow process should now look similar to the following:

process LIMMA_VOOM {
    container "quay.io/grace_hall1/limma-voom:3.50.1"
    publishDir "./outputs"

    input:
    path anno_geneanno
    path cont_cinfo
    path input_counts
    path input_fact_finfo
    path limma_voom_script
    val out_report1
    val out_report_files_path

    output:
    path "outReport.html", emit: outReport2

    script:
    """
    Rscript \
    ${limma_voom_script} \
    -C ${cont_cinfo} \
    -R ${out_report1} \
    -a ${anno_geneanno} \
    -f ${input_fact_finfo} \
    -m ${input_counts} \
    -G 10 \
    -P "i" \
    -c 1 \
    -d "BH" \
    -l 0 \
    -n "TMM" \
    -o ${out_report_files_path} \
    -p 0.05 \
    -s 0 \
    -t 3 \
    -z 0
    """
}

Now that we have fixed up the process definition, we can set up nextflow.config and run the process with sample data to test.

Running Limma Voom as a Workflow¶

In this section we will run our translated LIMMA_VOOM process.

We will set up a workflow with a single LIMMA_VOOM task, and will supply inputs to this task using nextflow.config.

Setting up nextflow.config

To run this process, we will set up a nextflow.config file to supply inputs and other config.

Create a new file called nextflow.config in the limma_voom/ folder alongside limma_voom.nf.

Copy and paste the following code into your nextflow.config file.

nextflow.enable.dsl = 2
singularity.enabled = true
singularity.cacheDir = "$HOME/.singularity/cache"

params {
    limma_voom_script = "/home2/training/limma_voom/limma_voom.R"
    annotation_file   = "/home2/training/data/sample_data/galaxy/limma_voom/anno.txt"
    contrast_file     = "/home2/training/data/sample_data/galaxy/limma_voom/contrasts.txt"
    factor_file       = "/home2/training/data/sample_data/galaxy/limma_voom/factorinfo.txt"
    matrix_file       = "/home2/training/data/sample_data/galaxy/limma_voom/matrix.txt"
    html_path         = "outReport.html"
    output_path       = "."
}

This tells Nextflow to use Singularity, caching downloaded images in $HOME/.singularity/cache. It also sets up input parameters pointing to our sample data.
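The params above are absolute paths on the training VMs. A typo, or data stored in a different location, typically only surfaces when the task fails. One way to catch this earlier is a small pre-flight check. The helper check_inputs is hypothetical and not part of Nextflow. The commented example simply reuses the five file paths from the config.

```shell
# Hypothetical pre-flight helper (not part of Nextflow or janis-translate):
# report each argument that is not an existing file, and return non-zero
# if any are missing.
check_inputs() {
    local f missing=0
    for f in "$@"; do
        if [ ! -f "$f" ]; then
            echo "missing: $f" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example: the five input files referenced in nextflow.config on the training VM.
# check_inputs \
#     /home2/training/limma_voom/limma_voom.R \
#     /home2/training/data/sample_data/galaxy/limma_voom/anno.txt \
#     /home2/training/data/sample_data/galaxy/limma_voom/contrasts.txt \
#     /home2/training/data/sample_data/galaxy/limma_voom/factorinfo.txt \
#     /home2/training/data/sample_data/galaxy/limma_voom/matrix.txt
```

If the check prints nothing and returns 0, every input file exists. If it fails, correct the matching path in nextflow.config before running the workflow.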

Creating Workflow & Passing Data

Now that our input data is set up using the params global variable, we will add some lines to the top of limma_voom.nf to turn it into a workflow.

Copy and paste the following lines at the top of limma_voom.nf:

nextflow.enable.dsl = 2

workflow {
    LIMMA_VOOM(
        params.annotation_file,    // anno_geneanno
        params.contrast_file,      // cont_cinfo
        params.matrix_file,        // input_counts
        params.factor_file,        // input_fact_finfo
        params.limma_voom_script,  // limma_voom_script
        params.html_path,          // out_report1
        params.output_path,        // out_report_files_path
    )
}

The new workflow {} section declares the main workflow entry point.

When we run limma_voom.nf, Nextflow will look for this section and run the workflow contained within.

In our case, the workflow contains a single task, which runs the LIMMA_VOOM process defined below the workflow section. We pass the params set up in nextflow.config as the process inputs. These arguments are positional, so they must appear in the same order as the inputs declared in the process. The comment on each line names the input it feeds.

Running Our Workflow

Ensure you are in the limma_voom/ working directory, where nextflow.config and limma_voom.nf reside.

cd limma_voom

To run the workflow using our sample data, run the following command:

nextflow run limma_voom.nf

Nextflow automatically checks for a nextflow.config file in the current working directory and, if one exists, uses it to configure the run. Our inputs are supplied in nextflow.config alongside the DSL2 and Singularity settings, so the workflow should run without issue.

Viewing Results

If everything went well, we should see a new folder created called outputs/ which has a single outReport.html file inside.

If needed, you can check the data/final/galaxy/limma_voom folder as a reference.

By default, publishDir creates a symlink rather than a copy, so outputs/outReport.html will be a symlink to the file in the work directory where LIMMA_VOOM ran.

To see the symlink path, use the following command:

ls -lah outputs/outReport.html

The output should be similar to the following:

lrwxrwxrwx 1 training users 80 Jun 18 11:06 outputs/outReport.html -> /home2/training/limma_voom/work/02/8cd12271346eb884083815bfe0cbfa/outReport.html

The -> denotes a symlink, and the path after it is the actual file location.

If using VS Code, open that work directory to see the various data files and PDFs produced by LIMMA_VOOM.

If using the command line, cd into that directory instead, then run ls -lah to list its contents.
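The two command-line steps above, following the symlink and then listing the directory, can be combined into one command. This is a sketch that assumes GNU readlink, as on the Linux training VMs. The name inspect_workdir is a hypothetical helper.

```shell
# Hypothetical helper: follow a publishDir symlink back to the task's work
# directory and list everything the task produced there.
# Assumes GNU readlink for "readlink -f" (canonicalise, following all symlinks).
# Usage: inspect_workdir [path/to/published/file]
inspect_workdir() {
    local link="${1:-outputs/outReport.html}"
    local target
    target="$(readlink -f "$link")" || return 1
    ls -lah "$(dirname "$target")"
}
```

Run inspect_workdir outputs/outReport.html from the limma_voom/ folder to list the LIMMA_VOOM work directory without copying the hash path by hand.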

You should see the following files & directories:

# directories

- glimma_MDS

- glimma_Mut-WT

- glimma_volcano_Mut-WT

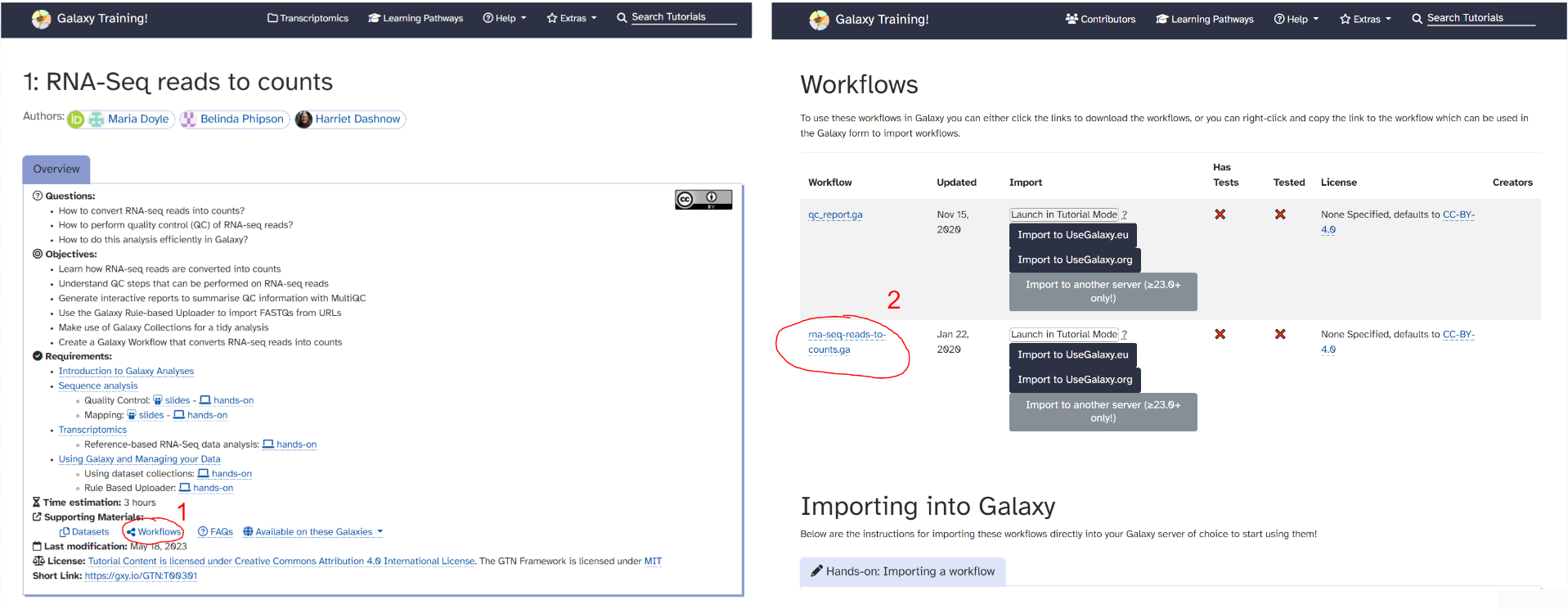

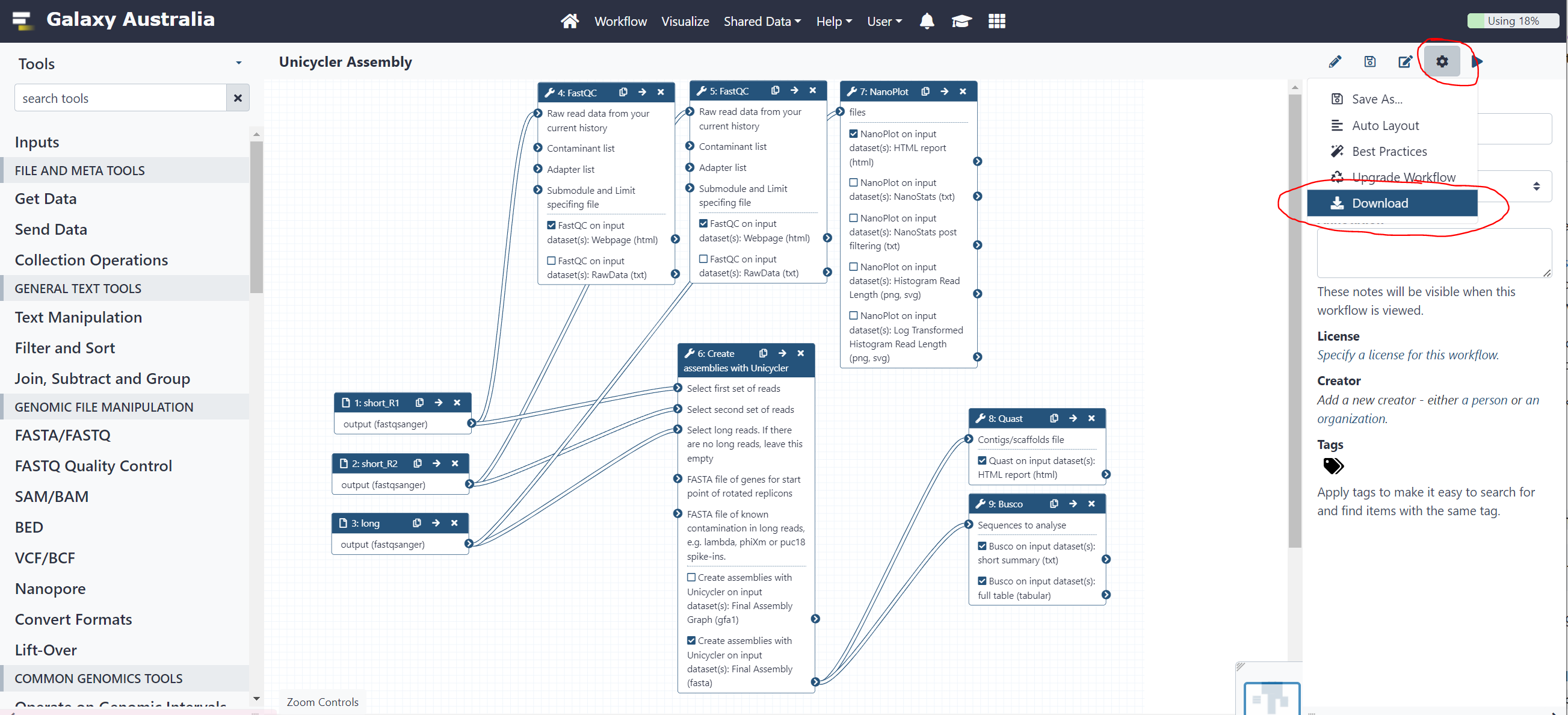

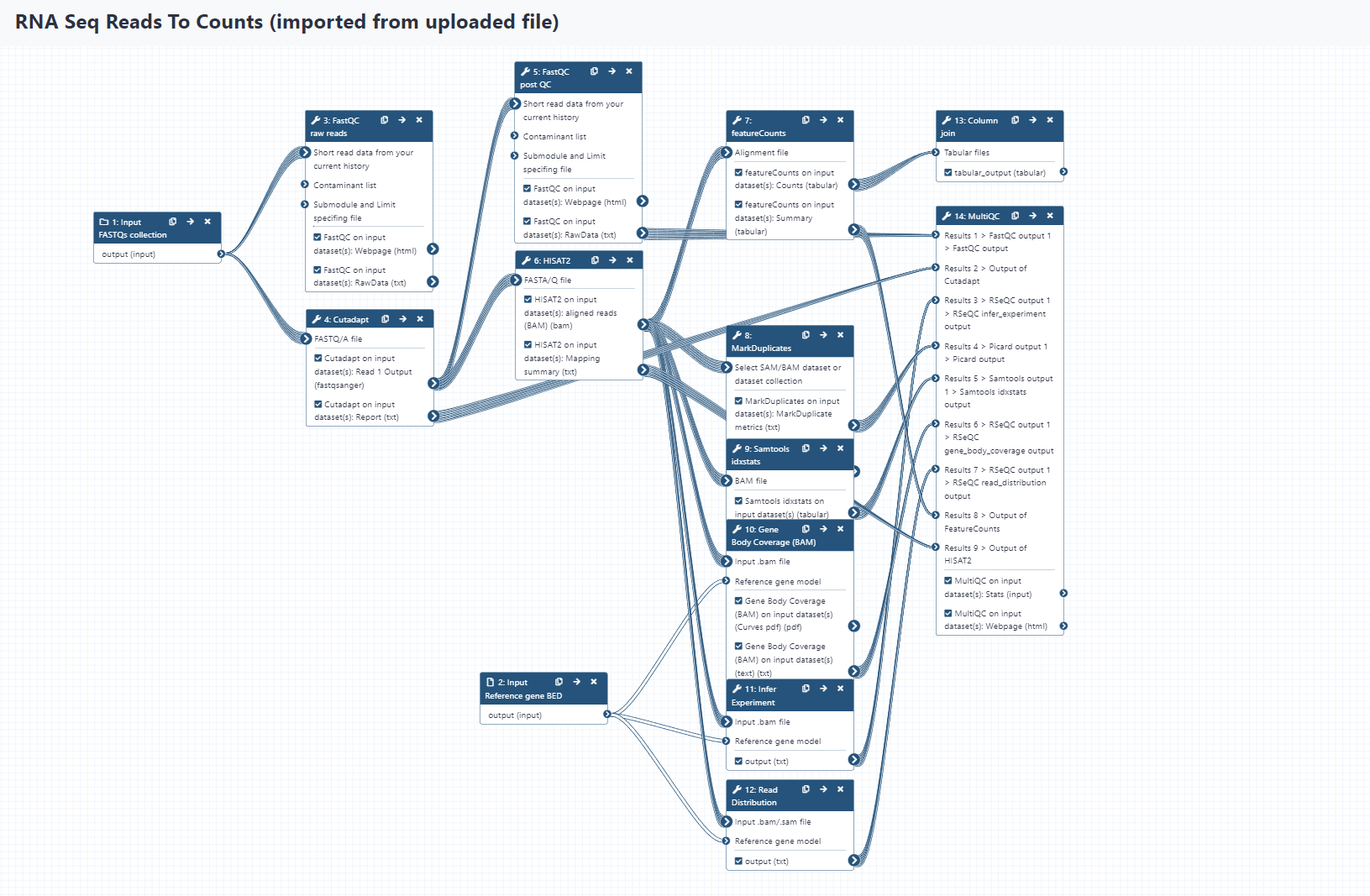

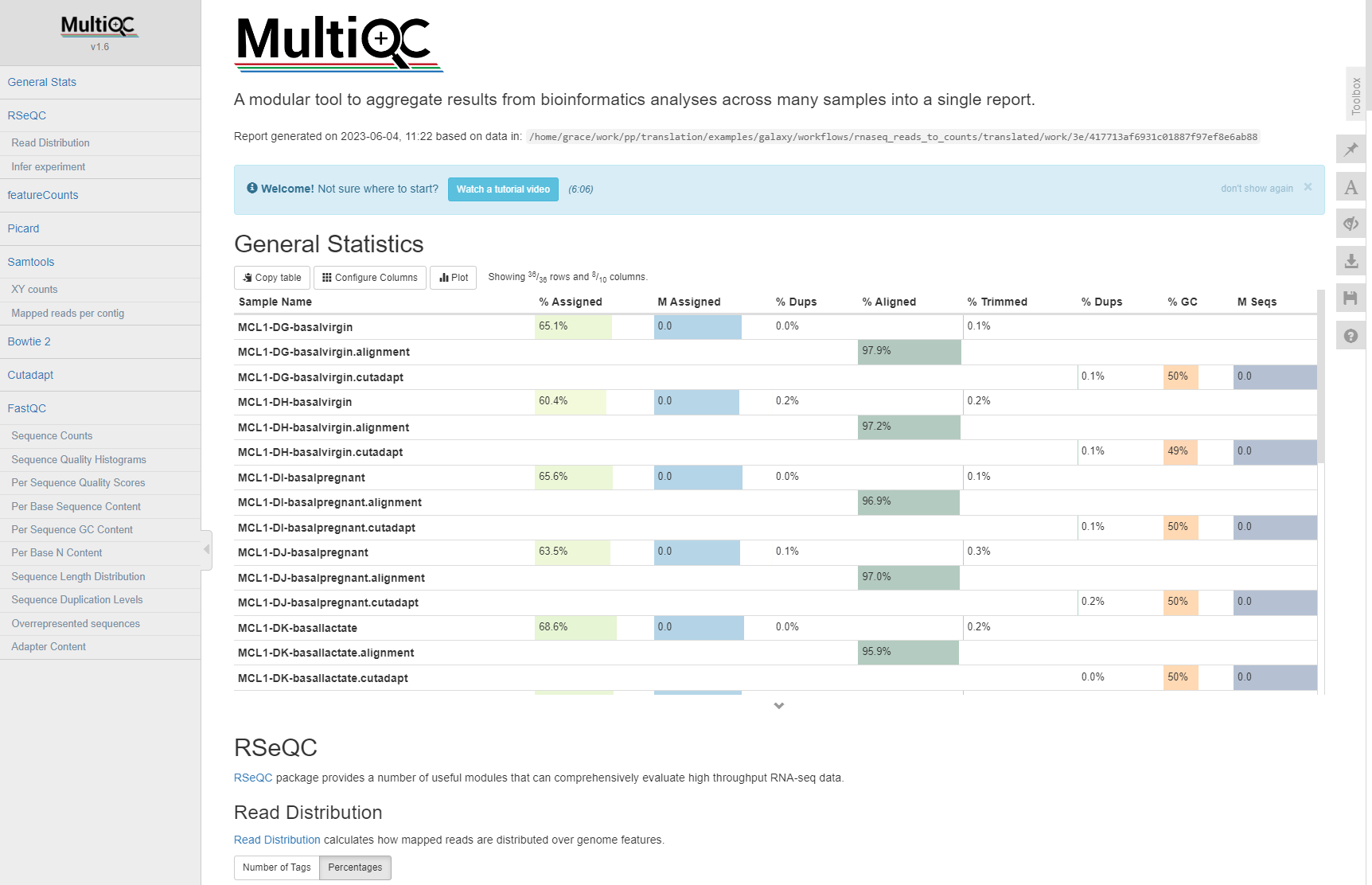

- glimma_WT-Mut